How do you reduce the carbon footprint of your code? Creating climate change software means building digital products that use less energy and produce fewer emissions. The internet currently consumes 466 terawatt-hours of electricity per year, and the average website generates 1.76 grams of CO2 per page view.

For a website with 10,000 monthly page views, that amounts to 211 kg of CO2 per year, roughly the same as driving a car for about 800 kilometers. Here are some tips from the developer community and MWDN specialists to help you reduce your software’s carbon footprint!
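That estimate is simple arithmetic you can rerun for your own traffic. Below is a minimal TypeScript sketch. It assumes a flat per-view figure (the 1.76 g average above) and the same number of views every month. `annualEmissionsKg` is an illustrative name, not part of any tool:

```typescript
// Estimate yearly CO2 emissions from a per-page-view figure.
// Assumption: every page view emits the same amount and traffic is flat month to month.
function annualEmissionsKg(gramsPerView: number, monthlyViews: number): number {
  const gramsPerYear = gramsPerView * monthlyViews * 12; // 12 months per year
  return gramsPerYear / 1000; // grams -> kilograms
}

// 1.76 g per view x 10,000 views per month x 12 months
const estimate = annualEmissionsKg(1.76, 10_000);
console.log(`${estimate.toFixed(1)} kg CO2 per year`); // 211.2 kg CO2 per year
```

For a site-specific estimate, swap in the per-view figure that websitecarbon.com reports for your own pages.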

Contents:

- Decrease carbon emissions with the correct UX and design

- SEO best practices to create climate change software

- Web development best practices for minimal carbon emission software

- Server architecture for lower carbon footprint

There are three main areas where developers can work to cut their code’s carbon footprint:

- UX and design

- Front-end development

- Architecture choices

Before tackling these, check how much CO2 your website produces by visiting websitecarbon.com. Simply enter your site’s URL and get instant feedback.

Why does this matter? According to Greenpeace, the IT sector accounted for 7% of global electricity use in 2021. In the US, data centers consumed about 4.4% of the country’s electricity in 2023, a figure expected to rise to 6.7–12% by 2028. By adopting low-carbon software practices, you’re not just improving performance; you’re also helping to reduce the internet’s environmental impact.

Decrease carbon emissions with the correct UX and design

Poor accessibility and UX are major contributors to a website’s carbon footprint.

Improving usability and SEO can help reduce this footprint. Why? Because when a page is easy to find and simple to understand, users spend less time — and use fewer device resources — navigating your site.

How can you achieve this?

- Clear, concise content: Get your message across using the fewest words possible.

- Accessibility: Ensure your site meets the WCAG Level A and AA success criteria.

- Error-free code: Fixing code errors is a big step toward better accessibility and efficiency.

- Responsive design: Avoid fixed pixel units. Instead, use em, rem, and % to create flexible layouts that adapt to different screen sizes.
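If your styles are generated from TypeScript (for example, in a CSS-in-JS setup), a tiny helper can convert the pixel values from a design file into `rem`. This sketch assumes the usual browser default root font size of 16px. `rem` is a hypothetical helper name, not a library function:

```typescript
// Assumption: the root font size is the common browser default of 16px.
// Because rem follows the user's own font-size setting, layouts built with it still adapt.
const ROOT_FONT_SIZE_PX = 16;

// Convert a pixel value from a design spec into a rem string.
function rem(px: number, rootPx: number = ROOT_FONT_SIZE_PX): string {
  if (rootPx <= 0) throw new RangeError("root font size must be positive");
  const value = Math.round((px / rootPx) * 10_000) / 10_000; // trim long float tails
  return `${value}rem`;
}

// Example style object using relative units instead of fixed pixels.
const cardStyle = {
  padding: rem(24), // "1.5rem"
  fontSize: rem(18), // "1.125rem"
  maxWidth: "100%", // fluid width instead of a fixed pixel width
};

console.log(cardStyle);
```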

SEO best practices to create climate change software

Search engine optimization is crucial for making your pages easier to find. To improve your SEO, focus on the following:

- Use a canonical URL to prevent duplicate content issues.

- Write a clear title and description for each page.

- Implement a sitemap.xml to help search engines crawl your site.

- Add Schema.org structured data to provide rich snippets.

- Include Open Graph (OG) tags for better link previews on social media.

- Ensure there are no 404 or 500 errors — fix broken links and server issues.

- Use HTTPS to secure your site and build trust.

- Write semantic HTML for better accessibility and SEO.

- Create a logical page outline using proper heading tags (H1, H2, etc.).
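Several items on that list (canonical URL, title, description, Open Graph tags, and Schema.org data) become tags in the page `<head>`. Below is a minimal sketch that generates them from page data and also builds a bare-bones sitemap.xml. The `PageMeta` shape and the function names are assumptions for illustration. Real projects usually get this output from their framework or CMS:

```typescript
// Hypothetical page metadata shape; adapt it to whatever your CMS or router provides.
interface PageMeta {
  url: string; // absolute canonical URL
  title: string;
  description: string;
  imageUrl?: string; // used for Open Graph link previews
  lastModified?: string; // ISO date, used in the sitemap
}

// Escape characters that would break HTML/XML text or double-quoted attributes.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderHeadTags(page: PageMeta): string {
  const title = escapeHtml(page.title);
  const description = escapeHtml(page.description);
  const url = escapeHtml(page.url);
  // Schema.org structured data as JSON-LD; "<" is escaped so the JSON cannot close the script tag.
  const jsonLd = JSON.stringify({
    "@context": "https://schema.org",
    "@type": "WebPage",
    name: page.title,
    description: page.description,
    url: page.url,
  }).replace(/</g, "\\u003c");

  const tags = [
    `<title>${title}</title>`,
    `<meta name="description" content="${description}">`,
    `<link rel="canonical" href="${url}">`,
    `<meta property="og:title" content="${title}">`,
    `<meta property="og:description" content="${description}">`,
    `<meta property="og:url" content="${url}">`,
    `<meta property="og:type" content="website">`,
  ];
  if (page.imageUrl) {
    tags.push(`<meta property="og:image" content="${escapeHtml(page.imageUrl)}">`);
  }
  tags.push(`<script type="application/ld+json">${jsonLd}</script>`);
  return tags.join("\n");
}

// Minimal sitemap following the sitemaps.org protocol (<loc> required, <lastmod> optional).
function renderSitemap(pages: PageMeta[]): string {
  const entries = pages.map((p) => {
    const lastmod = p.lastModified ? `<lastmod>${p.lastModified}</lastmod>` : "";
    return `  <url><loc>${escapeHtml(p.url)}</loc>${lastmod}</url>`;
  });
  return [
    `<?xml version="1.0" encoding="UTF-8"?>`,
    `<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">`,
    ...entries,
    `</urlset>`,
  ].join("\n");
}

const examplePage: PageMeta = {
  url: "https://example.com/blog/green-code",
  title: "Green code tips",
  description: "Cut the CO2 of every page view.",
};
console.log(renderHeadTags(examplePage));
```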

But attracting users is only the first step. Once they land on a page, how do you make sure it loads and runs as efficiently as possible?

Web development best practices for minimal carbon emission software

The performance of a website’s front end plays a significant role in its carbon footprint. In 2018, the average CO2 emission per page was 4g, but by 2021, that number had already dropped to 1.75g.

The biggest offenders are often things we don’t fully control: third-party libraries, media assets, JavaScript personalization, and tagging systems. They are frequently poorly optimized and may not be hosted on green servers, which increases their carbon footprint.

What can you do? Start by removing unnecessary third-party dependencies. Libraries like Lodash, jQuery, Modernizr, or Moment.js may not always be needed, since modern browsers now cover much of what they were used for. If you do need a library, choose one that ships ES modules (import/export). Bundlers like Webpack can then tree-shake it, so only the parts you actually import end up in the code users download.
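Many everyday helpers from those libraries now have built-in equivalents. The sketch below shows native replacements for a few common cases: Lodash-style `uniq` and `chunk`, and Moment.js-style date formatting through the standard `Intl.DateTimeFormat` API. The function names mirror Lodash's for familiarity, but they are local, hypothetical helpers:

```typescript
// Native stand-ins for helpers that often pull in a whole utility library.

// Like Lodash's uniq: remove duplicates while keeping first-seen order.
function uniq<T>(items: readonly T[]): T[] {
  return Array.from(new Set(items));
}

// Like Lodash's chunk: split an array into groups of `size` items.
function chunk<T>(items: readonly T[], size: number): T[][] {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("size must be a positive integer");
  }
  const groups: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    groups.push(items.slice(i, i + size));
  }
  return groups;
}

// Instead of Moment.js for display formatting: the built-in Intl API.
// The time zone is pinned to UTC so the output doesn't depend on the machine's zone.
const longDateFormat = new Intl.DateTimeFormat("en-US", {
  year: "numeric",
  month: "long",
  day: "numeric",
  timeZone: "UTC",
});

function formatDate(date: Date): string {
  return longDateFormat.format(date);
}

console.log(uniq([3, 1, 3, 2, 1])); // -> [3, 1, 2]
console.log(chunk(["a", "b", "c", "d", "e"], 2)); // -> [["a", "b"], ["c", "d"], ["e"]]
console.log(formatDate(new Date(Date.UTC(2024, 2, 14)))); // -> "March 14, 2024"
```

If you do keep Lodash, you can import individual functions from its ES-module build (for example, `import { debounce } from "lodash-es"`). That typically lets Webpack's tree shaking drop the rest.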

Also, be cautious with tools like Google Tag Manager and personalization systems. Many businesses use only a small fraction of their features, so limiting their use could help reduce unnecessary bloat and emissions.

Progressive enhancement vs graceful degradation

Progressive enhancement focuses on building a simple version of your website first, then adding advanced features based on the capabilities of users’ devices. This approach is more efficient, uses less code, and results in lower energy consumption. In contrast, graceful degradation builds a fully-featured website for the latest browsers, then adds extra code for older browsers, which leads to more bloat and higher energy use.
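In code, progressive enhancement usually means the plain HTML page already works, and extras switch on only when the browser and the user's own preferences allow them. The capability list and enhancement names in this minimal sketch are hypothetical. The detection does read real browser features: `fetch`, the Save-Data preference exposed as `navigator.connection.saveData` in Chromium-based browsers, and the `prefers-reduced-motion` media query. It checks them defensively, so it also runs where they are missing:

```typescript
// What the current browser can do and what the user has asked for.
interface Capabilities {
  fetch: boolean; // can submit forms in the background instead of a full page reload
  saveData: boolean; // user enabled a data-saving mode
  reducedMotion: boolean; // user prefers less animation
}

// Read capabilities defensively: any missing API simply counts as "not available".
function detectCapabilities(): Capabilities {
  const g = globalThis as unknown as {
    fetch?: unknown;
    navigator?: { connection?: { saveData?: boolean } };
    matchMedia?: (query: string) => { matches: boolean };
  };
  return {
    fetch: typeof g.fetch === "function",
    saveData: g.navigator?.connection?.saveData === true,
    reducedMotion: g.matchMedia?.("(prefers-reduced-motion: reduce)").matches === true,
  };
}

// The baseline page works without any of these; each one is an optional layer on top.
function planEnhancements(caps: Capabilities): string[] {
  const plan: string[] = [];
  if (caps.fetch) plan.push("background-form-submit");
  if (!caps.saveData) plan.push("high-res-images");
  if (!caps.saveData && !caps.reducedMotion) plan.push("animated-carousel");
  return plan;
}

console.log(planEnhancements(detectCapabilities()));
```

This approach uses less code overall because nothing has to be added back for older browsers. They simply keep the baseline.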

Media optimization: A critical best practice

Optimizing media is one of the most effective ways to boost performance and reduce emissions. The goal? Use the smallest file sizes, the right file types, and appropriate resolutions for each context.

For example:

- On mobile Chrome browsers, WebP images work well: they’re usually smaller than equivalent JPEGs or PNGs, and small screens don’t need large resolutions.

- On larger screens with Safari, you might need higher resolutions and, for older Safari versions that don’t support WebP, a different file type.
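Serving the right variant usually comes down to two decisions per request. The first is which format the browser accepts, which it advertises in the `Accept` request header. The second is how many pixels the image slot actually needs. The simplified sketch below works under those assumptions. The breakpoint widths, the 2x density cap, and the function names are hypothetical. Real pipelines also weigh quality settings and cache keys:

```typescript
type ImageFormat = "avif" | "webp" | "jpeg";

// Pick the most compact format the browser says it accepts.
// Simplification: q-values in the header are ignored; a type counts if it is listed at all.
function pickFormat(acceptHeader: string | undefined): ImageFormat {
  const accept = (acceptHeader ?? "").toLowerCase();
  if (accept.includes("image/avif")) return "avif";
  if (accept.includes("image/webp")) return "webp";
  return "jpeg"; // safe fallback every browser can display
}

// Hypothetical set of widths the image pipeline pre-generates.
const IMAGE_WIDTHS = [320, 640, 960, 1280, 1920, 2560];

// Smallest pre-generated width that still fills the slot at the screen's pixel density.
// Assumption: density is capped at 2x to save bytes on very dense phone screens.
function pickWidth(cssWidth: number, devicePixelRatio: number): number {
  const needed = Math.ceil(cssWidth * Math.min(devicePixelRatio, 2));
  for (const width of IMAGE_WIDTHS) {
    if (width >= needed) return width;
  }
  return IMAGE_WIDTHS[IMAGE_WIDTHS.length - 1]; // nothing larger exists, so use the biggest
}

// A phone (375 CSS px wide, 3x screen) sending a Chromium-style Accept header:
console.log(pickFormat("image/avif,image/webp,image/apng,*/*;q=0.8"), pickWidth(375, 3)); // avif 960
```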

While the concept sounds simple, every engineer building climate-friendly software knows the reality is far more complex. Different devices, browsers, and screen sizes require tailored optimizations.

And here’s the kicker: services like Google Stadia (shut down in 2023) could stream 4K games at 60 FPS with minimal latency, yet many websites still load 4 MB PNGs that drag down performance.

Why the disconnect? Because building your own optimization and resizing pipelines is expensive and time-consuming. Many companies try, but they’re always playing catch-up.

That’s why SaaS platforms like Cloudinary or imgix are game-changers. They handle media management and delivery, optimizing files for you in real time. It’s not just about having a digital asset management (DAM) system; it’s about ensuring media is delivered efficiently and sustainably.

The best part? These services are often more cost-effective than building custom solutions — and the performance gains are huge.
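With a hosted service, most of that optimization logic moves into the image URL. The sketch below builds delivery URLs for the two services named above. Cloudinary's automatic format, quality, and width parameters are `f_auto`, `q_auto`, and `w_`. imgix's equivalents are `auto=format,compress` and `w`. The cloud name, domain, and image paths are placeholders:

```typescript
// Placeholders: use your own Cloudinary cloud name and imgix source domain.
const CLOUDINARY_CLOUD_NAME = "your-cloud-name";
const IMGIX_DOMAIN = "your-source.imgix.net";

// Cloudinary puts transformations in the URL path:
// f_auto = best format for the requesting browser, q_auto = automatic quality, w_ = width in px.
function cloudinaryUrl(publicId: string, width: number): string {
  const w = Math.round(width);
  return `https://res.cloudinary.com/${CLOUDINARY_CLOUD_NAME}/image/upload/f_auto,q_auto,w_${w}/${publicId}`;
}

// imgix puts them in the query string:
// auto=format,compress picks a format and applies compression; w = width in px.
function imgixUrl(path: string, width: number): string {
  const cleanPath = path.replace(/^\/+/, ""); // avoid a double slash after the domain
  return `https://${IMGIX_DOMAIN}/${cleanPath}?auto=format,compress&w=${Math.round(width)}`;
}

// Build a responsive srcset so the browser downloads only the width it needs.
function buildSrcset(urlFor: (width: number) => string, widths: number[]): string {
  return widths.map((width) => `${urlFor(width)} ${width}w`).join(", ");
}

console.log(buildSrcset((w) => cloudinaryUrl("products/shoe.jpg", w), [400, 800]));
```

The output belongs in an `<img>` element's `srcset` attribute. Pair it with a `sizes` attribute that describes the slot width, so the browser can choose.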

Lazy loading

Implement lazy loading for everything — including the images we just discussed. The idea is simple: if a user doesn’t need something at a particular moment, don’t load it. There’s no point in wasting resources.

For example, if you have a mega menu that only appears when a user interacts with it — and that menu contains images — don’t load those images when the page first loads. Why? Because most users might never even open that menu.

In fact, if your SEO is strong, users will probably land on the exact page they need through Google, so they won’t be digging through your navigation.

The bottom line: don’t waste resources — only load content when it’s actually needed.
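For the mega-menu case, one way to defer the work is to wrap the expensive load so it runs only on first use, and every later caller reuses the result. Below is a minimal sketch. `lazyOnce` is a hypothetical helper, and the loader stands in for whatever actually fetches the menu's images. For plain images and iframes, the native `loading="lazy"` attribute handles the simple cases without any script.

```typescript
// Wrap an expensive loader so it runs only the first time something asks for it.
// Later callers share the same promise instead of starting a second download.
function lazyOnce<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (!pending) {
      pending = loader().catch((error: unknown) => {
        pending = undefined; // let a later call retry if this attempt failed
        throw error;
      });
    }
    return pending;
  };
}

// Hypothetical usage: the mega menu's images are requested only when it is first opened.
// In a real page the loader might be a dynamic import() or a fetch of image URLs.
const loadMenuImages = lazyOnce(async () => ["menu-shoes.webp", "menu-bags.webp"]);

function onMenuOpen(): void {
  loadMenuImages().then((images) => console.log(`showing ${images.length} menu images`));
}
```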

For example, Pinterest uses the main color of each image as the background of its loading placeholder, so users see a matching block of color while the full image loads.
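A placeholder color is cheap to compute and is usually calculated once, at upload or build time. This sketch does not reproduce Pinterest's method. It uses the simpler average color of the pixels as a stand-in for the dominant color. It assumes RGBA values in the layout a canvas returns from `getImageData()`, with four values per pixel:

```typescript
// Average the visible pixels of an RGBA buffer (4 values per pixel: r, g, b, alpha)
// and return a CSS hex color to use as a lightweight placeholder background.
function averageColor(rgba: ArrayLike<number>): string {
  let r = 0;
  let g = 0;
  let b = 0;
  let count = 0;
  for (let i = 0; i + 3 < rgba.length; i += 4) {
    if (rgba[i + 3] === 0) continue; // ignore fully transparent pixels
    r += rgba[i];
    g += rgba[i + 1];
    b += rgba[i + 2];
    count++;
  }
  if (count === 0) return "#cccccc"; // neutral gray when there is nothing to average
  const toHex = (total: number): string => ("0" + Math.round(total / count).toString(16)).slice(-2);
  return `#${toHex(r)}${toHex(g)}${toHex(b)}`;
}

// One red pixel and one blue pixel average to purple.
console.log(averageColor([255, 0, 0, 255, 0, 0, 255, 255])); // #800080
```

You can set the resulting color as the `background-color` of the image's container. The slot then shows a matching color until the real image arrives.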

Lazy-load everything — images, videos, iframes, and third-party libraries. By third-party libraries, we mean things like YouTube embeds or systems like Bazaarvoice that handle product reviews, ratings, and user comments.

If you load these libraries right away — for example, by putting their scripts in the header — the page will load all those scripts immediately, adding unnecessary weight and slowing things down.

Instead, delay loading these elements. If the comments section, for instance, is at the bottom of the page, wait until the user starts scrolling down. When they get close to that section, trigger the content to load.

After all, there’s a good chance some users will never scroll that far — so why load something they might never see?
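The wait-until-the-user-gets-close behavior described above maps well onto the browser's IntersectionObserver API. Its `rootMargin` option fires the callback shortly before an element scrolls into view. This sketch works under a few assumptions. The 200px margin and the `loadWhenNear` name are illustrative. The target type is left loose so the snippet compiles without DOM typings. Browsers without IntersectionObserver simply get the content right away:

```typescript
// Minimal structural types so the sketch compiles without DOM typings;
// in a browser, the real IntersectionObserver satisfies them.
interface ObservedEntry {
  isIntersecting: boolean;
}
interface MinimalObserver {
  observe(target: unknown): void;
  disconnect(): void;
}
type ObserverConstructor = new (
  callback: (entries: ObservedEntry[]) => void,
  options?: { rootMargin?: string },
) => MinimalObserver;

// Run `load` once, when `target` comes within `rootMargin` of the viewport.
function loadWhenNear(target: unknown, load: () => void, rootMargin = "200px 0px"): void {
  const IO = (globalThis as unknown as { IntersectionObserver?: ObserverConstructor })
    .IntersectionObserver;
  if (!IO) {
    load(); // no IntersectionObserver support: fall back to loading immediately
    return;
  }
  const observer = new IO(
    (entries) => {
      if (entries.some((entry) => entry.isIntersecting)) {
        observer.disconnect(); // stop watching; the content only needs loading once
        load();
      }
    },
    { rootMargin },
  );
  observer.observe(target);
}

// Hypothetical browser usage: inject a comments widget only when the reader nears it.
// loadWhenNear(document.getElementById("comments"), () => {
//   const script = document.createElement("script");
//   script.src = "https://widgets.example.com/comments.js"; // placeholder URL
//   document.body.appendChild(script);
// });
```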

Be cautious of GDPR requirements. In some cases, you can’t lazy-load certain scripts, such as a cookie consent manager, because they need to run early to check whether a user has given consent for cookies.

Server architecture for lower carbon footprint

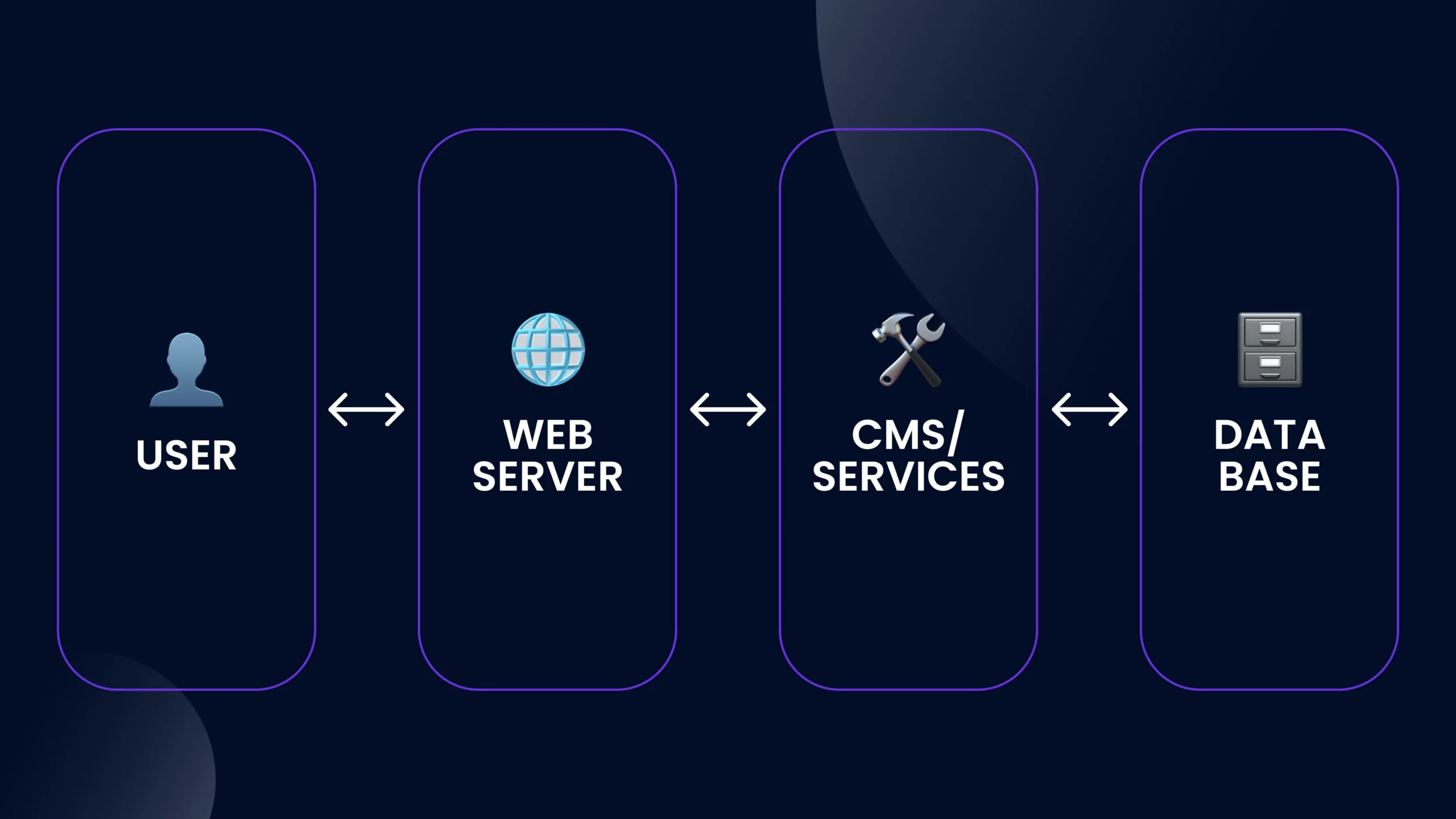

The common monolithic model has a significant environmental impact. In this setup, everything (the web server, CMS, services, database, and front end) is bundled together in one large package. While this approach is simple and straightforward, it can use resources inefficiently, leading to higher energy consumption and carbon emissions. The diagram above shows how the pieces fit together.

There’s a lot of ongoing activity, with all components always active and constantly in sync with each other. This system works as a single entity that generates HTML pages. However, there are several issues with this setup:

Cost. Because everything is included in this “big package” and always running, the system relies on expensive components that stay active around the clock and take part in every page load. This leads to higher operating costs.

Performance. While monolithic systems can perform well, they tend to be slower due to their complexity. Every time you interact with the site, the request has to go all the way through the system, retrieve the necessary data, and send it back. Even with optimization, this process requires energy.

Security. Large systems with so many interconnected components create a bigger attack surface. When vulnerabilities exist, an attacker might be able to access multiple parts of the system, especially if all parts are running simultaneously. Consider WordPress, for example: while improved, it’s still a monolithic system and, historically, one of the most targeted platforms. The constant activity of these systems makes them more vulnerable and power-hungry.

Scalability. Scaling a monolithic system requires significant resources and expertise. If your site needs to handle more traffic, you’ll likely need to pay a high price to scale it through third-party services. The process is complex, expensive, and difficult to manage.

A lot of businesses now use carbon emissions reporting software to get a better handle on their environmental impact. These tools estimate how much energy systems like this consume and the carbon footprint they leave behind. With this data, companies can spot problem areas and figure out how to make their architecture more eco-friendly.
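Many of these tools start from the same basic estimate. They take the energy used, scale it up by the data center's overhead (its power usage effectiveness, or PUE), and multiply the result by the carbon intensity of the local electricity grid. Below is a back-of-the-envelope sketch of that formula. Every input value is a made-up placeholder, not a measurement of any real system:

```typescript
interface ServerProfile {
  averagePowerWatts: number; // average draw of the machine, idle time included
  hoursPerDay: number; // 24 for an always-on server
  pue: number; // power usage effectiveness of the data center (1.0 = no overhead)
  gridGramsPerKwh: number; // carbon intensity of the local grid, g CO2e per kWh
}

// Daily emissions in kilograms of CO2e:
// energy (kWh) = watts x hours / 1000, scaled by PUE, then multiplied by grid intensity.
function dailyEmissionsKg(p: ServerProfile): number {
  const itEnergyKwh = (p.averagePowerWatts * p.hoursPerDay) / 1000;
  const facilityEnergyKwh = itEnergyKwh * p.pue;
  return (facilityEnergyKwh * p.gridGramsPerKwh) / 1000; // grams -> kilograms
}

// Placeholder numbers for one always-on monolith server.
const alwaysOn: ServerProfile = { averagePowerWatts: 200, hoursPerDay: 24, pue: 1.5, gridGramsPerKwh: 400 };
console.log(`${dailyEmissionsKg(alwaysOn).toFixed(2)} kg CO2e per day`); // 2.88 kg CO2e per day
```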

Solution 1: Jamstack

Jamstack won’t solve everything, but it can really help a large share of websites. Jamstack stands for:

- JavaScript

- APIs

- Markup

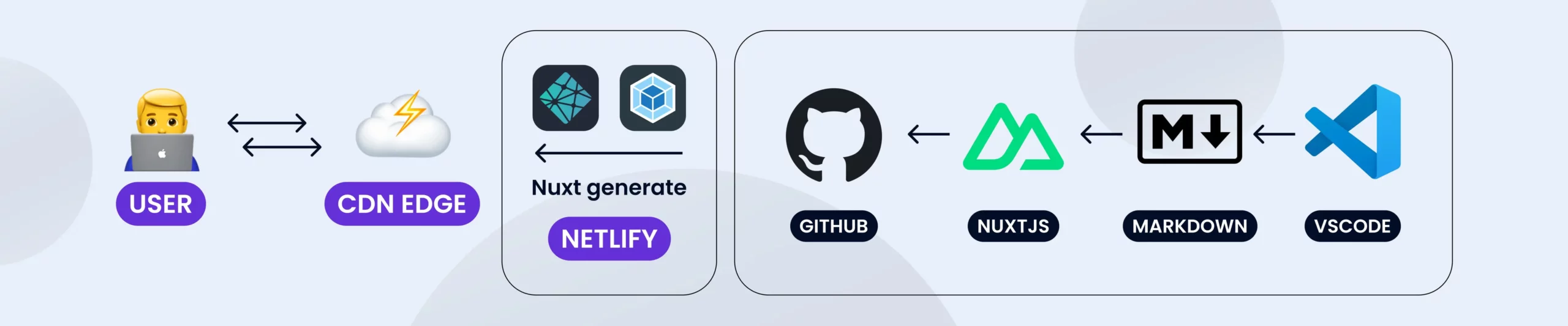

Here’s the simplest way to understand it: you write your code locally and push it to a version control system like Git, where your content and data are stored as well. A build process, run on your machine or by a continuous integration (CI) system, then compiles everything into a static website.

The key difference here is that the dynamic parts—like keeping track of the state and handling complex tasks—are only needed during the build process. Once the site is built, it generates a set of static files: HTML, CSS, JavaScript, images, fonts, and so on.
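To make the build-time idea concrete, here is a toy build step. It takes content records and renders each one to a static HTML file. In a real project, those records would be Markdown files from Git or entries from a headless CMS API. The `ContentPage` shape, file paths, and template are hypothetical, and real static site generators do far more:

```typescript
// One piece of content, as it might come from Git-tracked Markdown or a CMS.
interface ContentPage {
  slug: string; // "" for the home page
  title: string;
  body: string; // plain text here to keep the sketch short
}

function escapeText(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Render every page once, at build time. The result maps file paths to HTML,
// ready to upload to a CDN; none of this runs when a visitor requests a page.
function buildSite(pages: ContentPage[]): Map<string, string> {
  const files = new Map<string, string>();
  for (const page of pages) {
    const path = page.slug === "" ? "index.html" : `${page.slug}/index.html`;
    const html = [
      "<!doctype html>",
      `<html lang="en"><head><meta charset="utf-8"><title>${escapeText(page.title)}</title></head>`,
      `<body><h1>${escapeText(page.title)}</h1><p>${escapeText(page.body)}</p></body></html>`,
    ].join("\n");
    files.set(path, html);
  }
  return files;
}

const output = buildSite([
  { slug: "", title: "Home", body: "Welcome." },
  { slug: "about", title: "About us", body: "We build low-carbon sites." },
]);
console.log(Array.from(output.keys())); // -> ["index.html", "about/index.html"]
```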

After that, these static files are deployed to the edge servers of a Content Delivery Network (CDN). This means there is no need for an origin server to deliver content to users because everything is already static and pre-built. The CDN simply serves the static files directly to users, making the website faster and more efficient.

When you save and push changes to your website, they go to GitHub (or another version control platform like GitLab or Bitbucket). From there, a service like Netlify (or alternatives like Vercel, Cloudflare Pages, or AWS Amplify) is notified about the update, typically through a webhook.

Netlify then says: “Hey, there’s a new change — let’s rebuild the front end and pull in all the latest data.” It compiles your website, generating a bundle of static files — HTML, CSS, JavaScript, images, and fonts — and distributes them across multiple servers around the world, typically on a Content Delivery Network (CDN).
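That notification doesn't have to come from Git. Netlify, for example, also offers build hooks: unique URLs that start a new build and deploy when they receive a POST request. These are handy for scripts or CMS publish events. The minimal sketch below assumes you have created a build hook in your site settings. The hook URL is a placeholder, and `fetch` is passed in so the function is easy to test:

```typescript
// Minimal shape of the fetch function this sketch relies on (the standard fetch satisfies it).
type FetchLike = (url: string, init: { method: string }) => Promise<{ ok: boolean; status: number }>;

// Placeholder: replace with the build hook URL from your Netlify site settings.
const BUILD_HOOK_URL = "https://api.netlify.com/build_hooks/YOUR_HOOK_ID";

// Ask the hosting platform to rebuild and redeploy the static site.
async function triggerRebuild(fetchImpl: FetchLike, hookUrl: string = BUILD_HOOK_URL): Promise<void> {
  const response = await fetchImpl(hookUrl, { method: "POST" });
  if (!response.ok) {
    throw new Error(`Build hook failed with HTTP ${response.status}`);
  }
}

// Hypothetical usage from a CMS "publish" handler or a deploy script:
// await triggerRebuild(fetch);
```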

What does this mean for scalability? Since the site consists of pre-built static files, scaling is simple. You don’t have to spin up extra servers or maintain a running origin server — you just distribute those static files to even more locations if needed. There’s no extra cost for keeping services running because there are no active backend processes. Everything is decentralized, and the complexity happens during the build process — where data is fetched, content is generated, and files are created.

Can most websites use this approach? Yes! Many websites could run on Jamstack, especially when combined with SaaS (Software as a Service) providers. For example:

- If you want content editors to manage pages without dealing with code, you can use a Headless CMS (like Contentful, Sanity, or Strapi). Editors work with a familiar interface, and when they hit “publish,” it triggers the build process — regenerating the static site with the latest content.

- If your site needs user logins, you can integrate identity providers (such as Auth0, Clerk, or Firebase Authentication). This keeps authentication separate from your main site, meaning you only scale the identity service if traffic increases — not the entire website.

The key advantage of this architecture is that you only scale the parts you actually use. If you don’t need a certain feature, you’re not paying for unused server time. If one part of your site — like authentication — gets heavy traffic, you scale just that part, not the whole system.

Solution 2: Serverless

Serverless architecture builds on the idea of not having a central origin server. Instead of running everything on one big, always-on server, your system is broken down into microservices — small, independent functions that run in the cloud.

These functions are stateless — they take an input, process it, and return an output, without keeping track of anything beyond that single task. They don’t “remember” anything or stay active when not in use.

The key benefit? They only run when needed.

- If no one is using a particular function, it stays off — so you’re not paying for idle server time.

- When someone triggers an action (like submitting a form or processing a payment), the function activates, does its job, and then shuts down again.

- If there’s a spike in demand — for example, a lot of users performing the same action at once — only that specific function scales automatically without affecting the rest of the system.
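A stateless function boils down to request in, response out. The sketch below is shaped like a typical HTTP-triggered handler. Its `statusCode`/`body` result mirrors the format AWS Lambda uses behind API Gateway, but the event type is simplified and the sign-up use case is hypothetical. Because it keeps nothing between calls, the platform can start, stop, or run many copies of it at once:

```typescript
// Simplified HTTP event: only the raw request body is used here.
interface HttpEvent {
  body: string | null;
}

interface HttpResult {
  statusCode: number;
  body: string;
}

// A newsletter sign-up handler. Everything it needs arrives in `event`, and nothing
// is kept in module-level variables, so any running copy can serve any request.
async function handler(event: HttpEvent): Promise<HttpResult> {
  let payload: unknown;
  try {
    payload = JSON.parse(event.body ?? "");
  } catch {
    return { statusCode: 400, body: JSON.stringify({ error: "Body must be valid JSON" }) };
  }

  const email = (payload as { email?: unknown } | null)?.email;
  if (typeof email !== "string" || !/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) {
    return { statusCode: 422, body: JSON.stringify({ error: "A valid email is required" }) };
  }

  // A real function would call a mailing-list API or push to a queue here (omitted).
  return { statusCode: 200, body: JSON.stringify({ subscribed: email }) };
}
```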

You can link these microservices together and orchestrate them — meaning you decide how they work together and in what order — creating a fully functional, cloud-based system without relying on a single, always-on server.

This flexible, cost-effective setup not only saves money but also cuts down on energy use. Plus, some companies now pair serverless tech with carbon-tracking tools to monitor the environmental impact of their cloud infrastructure, helping them spot inefficiencies and build greener, more sustainable apps.

Over the past five years, serverless architecture has seen significant advancements. The core principles remain consistent: breaking down applications into stateless microservices that activate on demand, enhancing flexibility, cost-efficiency, and scalability.

Serverless computing has expanded beyond early adopters, with a notable rise in usage across various sectors. Recent data indicates that over 70% of AWS customers and 60% of Google Cloud customers utilize serverless solutions, with Azure adoption at 49%.

The serverless architecture market is growing rapidly: it was valued at over USD 19.42 billion in 2024 and is projected to exceed USD 383.54 billion by 2037, a compound annual growth rate (CAGR) of over 25.5% between 2025 and 2037.

Developers now have more tools and services at their fingertips, making it easier to set up serverless architectures. This gives them more flexibility to create solutions that fit their app’s specific needs.

For example, businesses increasingly pair serverless computing with carbon emissions management software to track and reduce the environmental impact of their cloud operations. Since serverless only uses resources when triggered, combining it with tools that monitor and manage carbon output helps companies align their tech strategies with their climate goals.

As serverless tech keeps growing, there’s also a bigger push to strengthen security and make sure different services and platforms work smoothly together. This addresses earlier pain points and makes serverless apps more reliable.

Server parks and server farms

Server parks, some of them built underground, use a ton of energy, from cooling systems and security to backups and redundancy. It all adds up.

By default, CDNs and server farms don’t have a low carbon footprint. If you need to use them, look for green hosts or green CDNs. This doesn’t mean their servers are magically eco-friendly, but many offset their carbon emissions, for example by investing in renewable energy or carbon capture projects, to become carbon neutral or even carbon positive.

Some companies are taking it a step further by using carbon emissions software to track and reduce their environmental impact. So, when picking a provider, go for those making real moves to cut their carbon footprint — and push your clients to do the same.
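You can check whether a host is listed as green before committing to it. The Green Web Foundation publishes a free "greencheck" API for this. The sketch below assumes its v3 endpoint (`https://api.thegreenwebfoundation.org/api/v3/greencheck/<hostname>`) and a JSON response with a boolean `green` field, so confirm both against the current API documentation. `fetch` is injected to keep the function testable:

```typescript
// Minimal shape of fetch that this sketch needs (the standard fetch satisfies it).
type JsonFetch = (url: string) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Assumed endpoint; confirm it against the Green Web Foundation's current API documentation.
const GREENCHECK_BASE_URL = "https://api.thegreenwebfoundation.org/api/v3/greencheck/";

// Returns true only if the API explicitly reports the hostname as green.
async function isGreenHost(hostname: string, fetchImpl: JsonFetch): Promise<boolean> {
  const response = await fetchImpl(GREENCHECK_BASE_URL + encodeURIComponent(hostname));
  if (!response.ok) {
    throw new Error(`Green check failed with HTTP ${response.status}`);
  }
  const data = await response.json();
  return typeof data === "object" && data !== null && (data as { green?: unknown }).green === true;
}

// Hypothetical usage in a script or CI step:
// isGreenHost("example.com", fetch).then((green) => console.log(green ? "green" : "not listed as green"));
```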

Summing up

It’s simpler than it sounds. Focus on the basics: follow good UX and SEO practices, optimize performance, cut unnecessary bloat, and handle media efficiently. Pick the right architecture and go with a green host or CDN — and you’re already on the right track.

Need a developer or designer to help you build low-carbon digital products? Reach out to us — we’ll connect you with top specialists anytime and anywhere!