AWS is known as one of the first major players in cloud computing and cloud services. While the concept of the cloud started growing in the early 2000s, Amazon officially launched AWS in 2006 with just three services: storage buckets (S3), compute instances (EC2), and a messaging queue (SQS). Want someone to manage your cloud setup for you? At MWDN, we can help you hire cloud engineers and other cloud specialists. Keep on reading for more information on cloud services!

Content:

- AWS for robotics, IoT, and space

- Quantum and future tech in the cloud: Amazon Braket

- Core compute services

- Going serverless

- Container management

- Cloud storage solutions

- Database services

- Analytics and big data

- Machine learning and AI services

- Security and identity

- Infrastructure as Code and frontend integration

- What is a software factory?

- Tips for new AWS users

Soon after Amazon, Microsoft launched Azure, and Google followed with Google Cloud Platform (GCP). Today, AWS remains the top cloud provider, with Azure and GCP right behind. Other platforms like IBM Cloud, Oracle Cloud, and Alibaba Cloud are also widely used in different regions.

Over the years, AWS has grown from just three services to more than 200. Now, they cover everything from AI and machine learning to containers and serverless computing. Some services overlap, which makes the platform powerful, yet overwhelming at times.

To help you understand this vast ecosystem, we’ve put together a list of almost 40 AWS services, and we bet there are a few you’ve never heard of!

AWS for robotics, IoT, and space

If you work on robotics, AWS has RoboMaker. This service lets you simulate, test, and fine-tune your robot applications in a scalable cloud environment without needing a physical robot for every test. It supports frameworks like ROS and makes development much faster and more flexible.

Once your robots are out in the real world, like in homes, warehouses, or hospitals, you can use AWS IoT Core to securely connect them to the cloud. This lets you collect real-time data, push software updates, monitor device health, and manage fleets remotely, all without interrupting their operation.

And if your project is even more ambitious, say, involving satellites, you can turn to AWS Ground Station. It connects your satellite directly to the AWS global infrastructure via a network of ground antennas, so you can process and analyze satellite data without needing to build and maintain your own ground station.

Quantum and future tech in the cloud: Amazon Braket

If you’re curious about the future of computing, Amazon Braket is AWS’s gateway into the world of quantum computing. It lets researchers, scientists, and developers explore quantum algorithms by providing access to actual quantum computers from providers like IonQ, Rigetti, and Oxford Quantum Circuits, as well as high-performance classical simulators.

With Braket, you can experiment with quantum circuits, test hybrid quantum-classical algorithms, and run jobs using a familiar Python-based SDK, all without needing to own or maintain quantum hardware. It’s a great way to explore how quantum computing could one day solve problems too complex for traditional systems, such as in cryptography, drug discovery, or advanced optimization.

Even if you’re not a quantum physicist, Braket lowers the barrier to entry, giving developers a chance to get hands-on with one of the most promising technologies of the future.

Core compute services

But most developers turn to the cloud to tackle real-world challenges, which is why it makes sense to start by looking at the core computing services.

Elastic Compute Cloud (EC2)

One of the original AWS offerings, Elastic Compute Cloud (EC2), is still one of the most fundamental building blocks of the platform. It allows you to create virtual machines (instances) in the cloud, where you can customize the operating system, memory, and computing power.

EC2 is like renting an apartment in the cloud, where you pay for the space by the second.

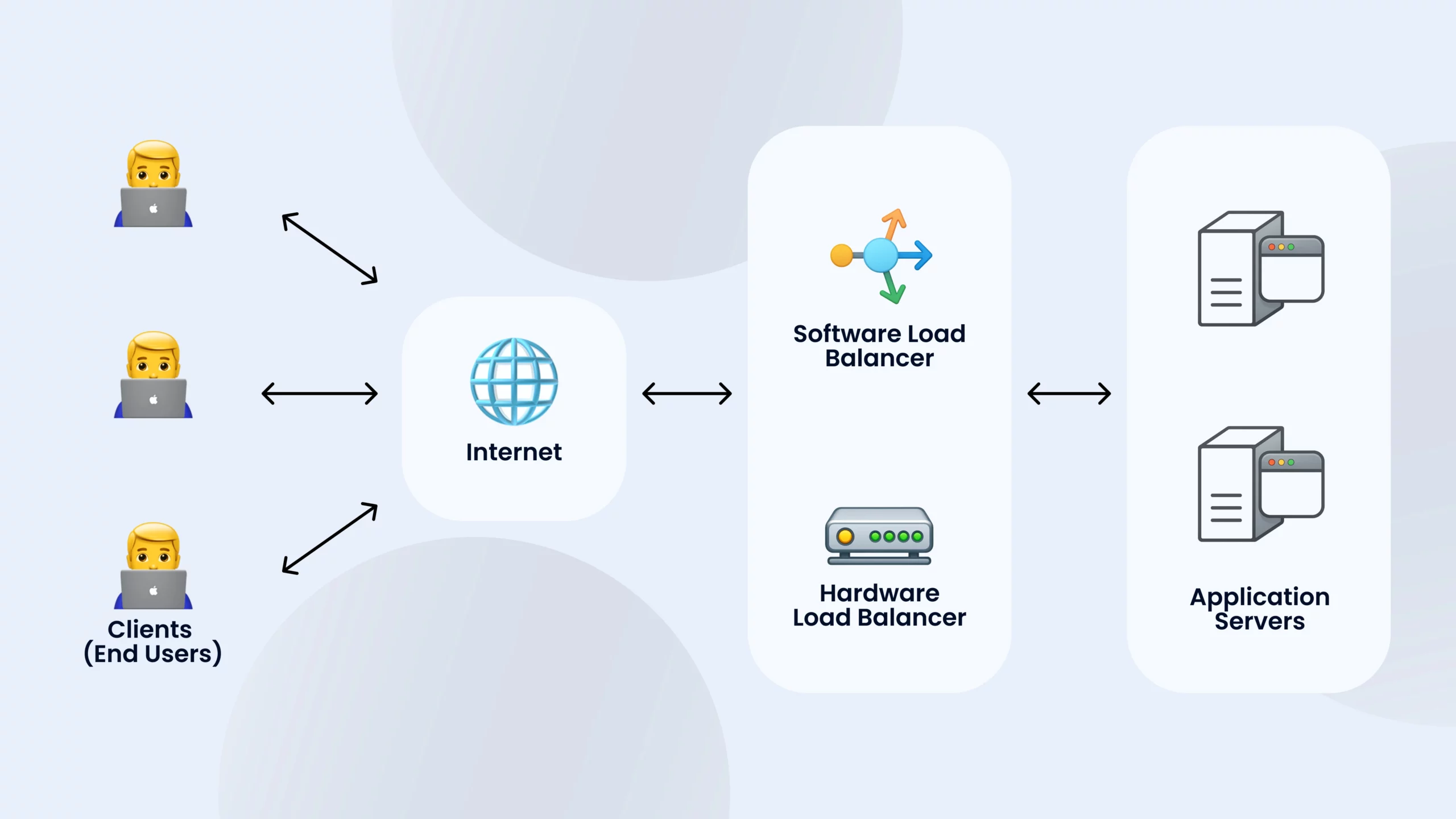

A common use case for EC2 is hosting web applications. However, as your app grows, you’ll often need to distribute traffic across multiple instances to handle increased load. For these cases, Elastic Load Balancing (ELB) might be useful. It automatically distributes incoming traffic across multiple EC2 instances, providing high availability and reliability for your application.

CloudWatch and Auto Scaling

AWS introduced CloudWatch, a service that collects logs and metrics from each EC2 instance. The data gathered by CloudWatch can then be passed to Auto Scaling, which lets you define policies that automatically create new instances as traffic increases or when utilization thresholds are met.

These tools were revolutionary for their time, simplifying infrastructure management by making scaling and resource allocation automatic.
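To make the scaling policy idea more concrete, here is a minimal sketch of a target-tracking policy that keeps average CPU around a chosen value. It only builds the request parameters as a plain dictionary, in the shape the boto3 Auto Scaling client accepts for `put_scaling_policy`. The group name `web-app-asg` and the 50% target are assumptions for illustration.

```python
import json


def target_tracking_policy(group_name: str, target_cpu_percent: float) -> dict:
    """Build keyword arguments for an Auto Scaling target-tracking policy."""
    return {
        "AutoScalingGroupName": group_name,  # hypothetical group name
        "PolicyName": f"{group_name}-cpu-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            # Track the group's average CPU, a metric CloudWatch collects.
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization",
            },
            # Instances are added or removed to stay near this value.
            "TargetValue": target_cpu_percent,
        },
    }


policy = target_tracking_policy("web-app-asg", 50.0)
print(json.dumps(policy, indent=2))
```

With boto3 installed and credentials configured, a dictionary like this could be passed as `client.put_scaling_policy(**policy)`, where `client` is an `autoscaling` client.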

Elastic Beanstalk

While EC2, ELB, and CloudWatch made managing applications easier, developers still desired an even more streamlined approach. That’s why Elastic Beanstalk was introduced in 2011.

Elastic Beanstalk provides a higher level of abstraction, helping developers deploy their apps (for example, Ruby on Rails, Node.js, or Python apps) without worrying about the underlying infrastructure. Here’s how it works:

- Choose a template.

- Deploy your code.

- Let AWS handle the scaling, load balancing, and infrastructure management.

This service, often referred to as Platform as a Service (PaaS), allowed developers to focus on their application code, rather than worrying about infrastructure. However, as of July 18, 2022, AWS retired all platform branches based on the older Amazon Linux AMI (AL1), encouraging developers to migrate to newer platforms.

Lightsail: Simplifying the process further

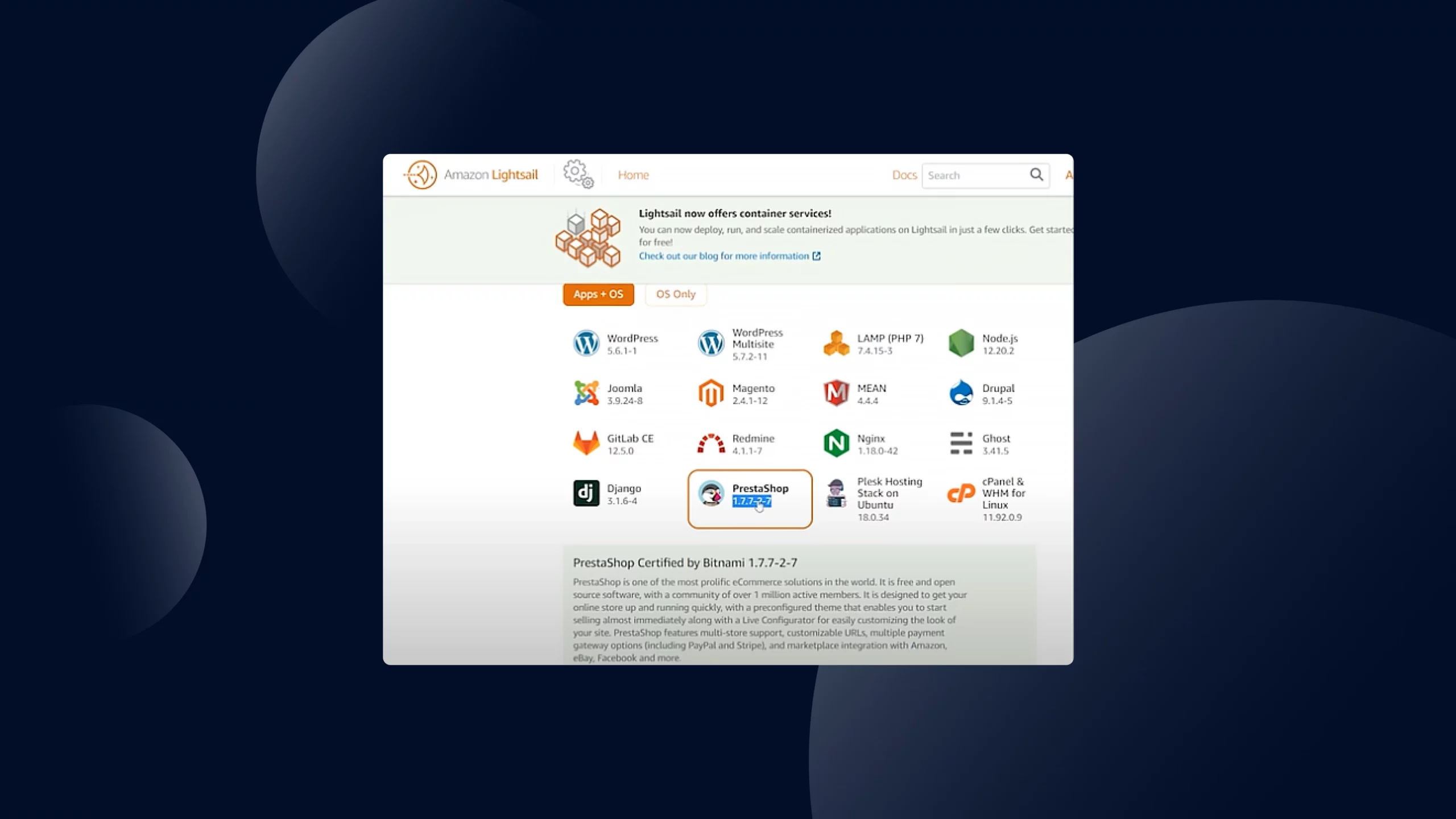

For those who don’t want to deal with any infrastructure management at all, Lightsail offers an even simpler alternative. It’s perfect for users who want to deploy a service like WordPress with minimal configuration.

Lightsail allows you to:

- Point and click to deploy applications.

- Worry even less about the underlying infrastructure.

It’s a “set it and forget it” approach for cloud computing, where you can focus entirely on your application while AWS handles the rest.

Going serverless

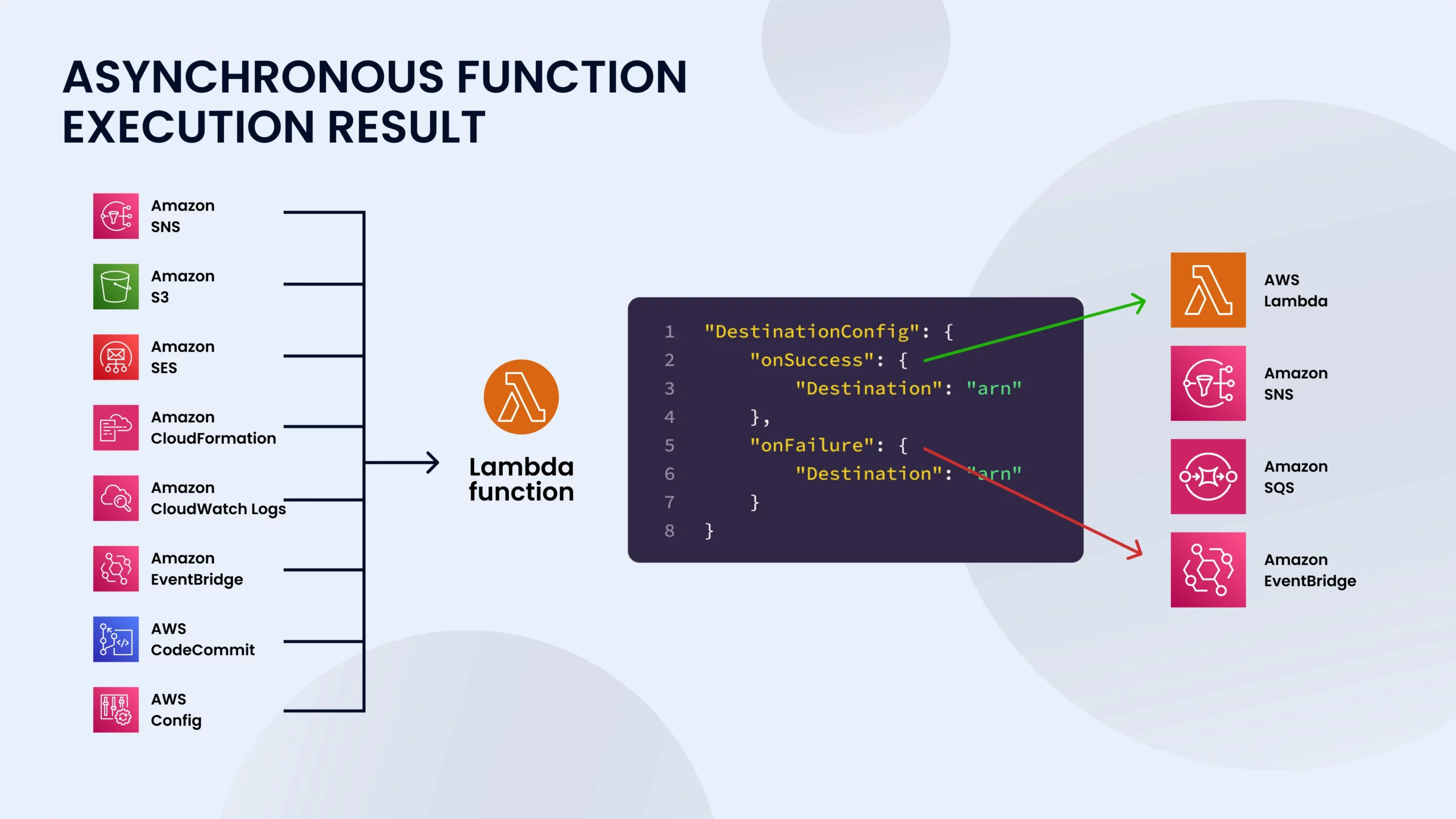

Many computing tasks are ephemeral, meaning they don’t require any persistent state on the server. So, why bother deploying a server for such code? In 2014, AWS Lambda introduced a game-changing approach: Functions as a Service (FaaS), or serverless computing.

Serverless computing with AWS Lambda

With Lambda, you simply:

- Upload your code.

- Choose the event that should trigger your code.

From there, AWS handles traffic scaling and networking automatically, all in the background. Unlike traditional servers, with Lambda, you only pay for the exact number of requests and computing time your code uses, making it an incredibly cost-efficient solution for many workloads.
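As a rough illustration of the "upload your code" step, a Python Lambda function is just a handler that receives an event and a context object. The sketch below assumes a hypothetical event with a `name` field. Real event shapes depend on the trigger you choose.

```python
import json


def lambda_handler(event, context):
    """Entry point that Lambda calls once per triggering event."""
    # "name" is a made-up field for this example, not a standard event key.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local smoke test; in Lambda, the service supplies event and context.
response = lambda_handler({"name": "AWS"}, None)
print(response["body"])
```

Because the handler is a plain function, it can be tested locally like this before it is ever deployed.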

Serverless Application Repository

If you’d rather not write your own code, you can use the Serverless Application Repository to find pre-built functions. These functions can be deployed with a single click, saving you time and effort while still providing the benefits of serverless computing.

AWS Outposts for enterprises

But what if you’re a large enterprise with a bunch of your own servers? You don’t have to throw everything out just to use AWS services. Choose AWS Outposts instead!

With Outposts, AWS installs and manages its own hardware in your data center, so you can run AWS services and APIs on premises. This means you can keep workloads close to your existing systems while using the same AWS tools, which makes it a good fit for businesses looking to modernize without abandoning their on-premises servers.

Container management

Many apps today are containerized using Docker, which makes them portable across multiple clouds and computing environments with minimal effort. This is an essential part of modern app development and deployment.

To run a container, the first step is to create a Docker image and store it somewhere. Elastic Container Registry (ECR) is AWS’s solution for uploading and storing Docker images. Once your image is stored in ECR, tools like Elastic Container Service (ECS) can pull the image and run it on virtual machines.

Elastic Container Service (ECS)

ECS is an API that helps you start, stop, and allocate virtual machines for your containers. It also allows you to connect containers to other AWS services, like load balancers, for better traffic management. It’s a versatile tool, but for some companies, more control over scaling is needed.

Kubernetes with EKS

For businesses that need more control over how their applications scale, AWS offers Elastic Kubernetes Service (EKS), a managed way to run Kubernetes, the open-source container orchestration tool. Kubernetes gives developers more fine-tuned control over how containers are deployed, scaled, and managed.

AWS Fargate: Serverless containers

In other scenarios, you might prefer a more automated approach where your containers behave like serverless functions. That’s a case for AWS Fargate. Fargate allows you to run containers without the need to allocate EC2 instances manually, so you’re free from managing the underlying infrastructure. It automates much of the container deployment and scaling process, so it’s an ideal solution for serverless workloads.
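For a feel of what "no EC2 instances to allocate" looks like in practice, here is a sketch of an ECS task definition that targets Fargate, written as the dictionary the boto3 ECS client accepts for `register_task_definition`. The family name, image URI, and CPU and memory sizes are assumptions for illustration.

```python
def fargate_task_definition(family: str, image_uri: str, port: int) -> dict:
    """Build keyword arguments describing a single-container Fargate task."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # Fargate tasks use awsvpc networking
        "cpu": "256",             # 0.25 vCPU, an illustrative size
        "memory": "512",          # MiB, an illustrative size
        "containerDefinitions": [
            {
                "name": family,
                "image": image_uri,  # for example, an image stored in ECR
                "essential": True,
                "portMappings": [{"containerPort": port, "protocol": "tcp"}],
            }
        ],
    }


# Placeholder account ID and repository name, not real resources.
task = fargate_task_definition(
    "demo-web",
    "123456789012.dkr.ecr.us-east-1.amazonaws.com/demo-web:latest",
    8080,
)
print(task["requiresCompatibilities"], task["cpu"], task["memory"])
```

Note that you size the task itself (CPU and memory) rather than picking an instance type. A real task that pulls a private image from ECR would typically also need an execution role, which is left out here for brevity.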

AWS App Runner: Simplified deployment

If you’re already working with a containerized application, the easiest way to deploy it to AWS is App Runner. Released in 2021, App Runner allows you to point to your container image, and it takes care of the rest, handling the orchestration and scaling automatically.

Cloud storage solutions

Running an application is only half the battle. Storing your data in the cloud is equally important. AWS offers some of the best solutions for cloud storage.

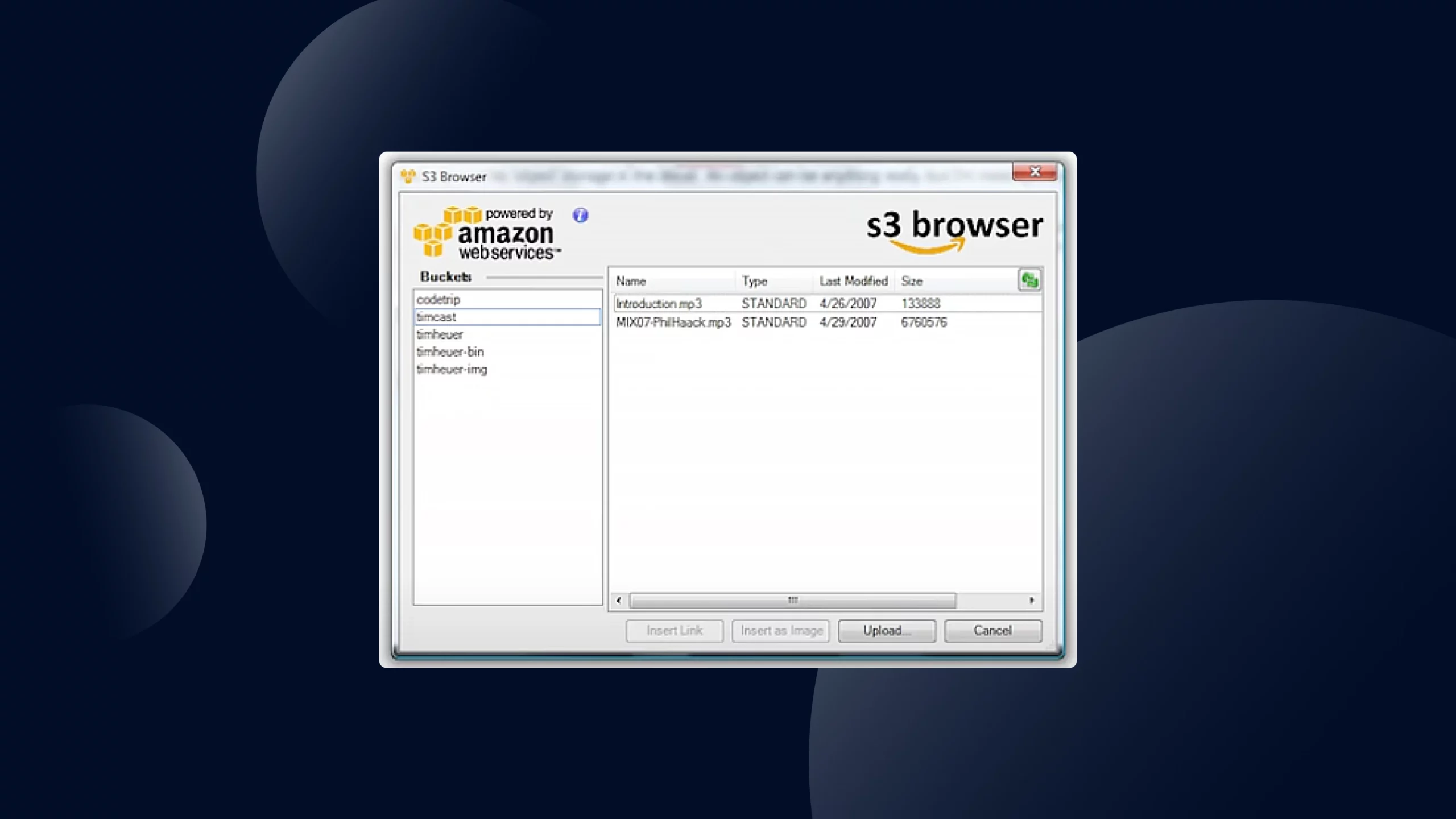

Amazon S3: Simple Storage Service

One of the first products AWS offered was Simple Storage Service (S3), and it remains one of the most widely used cloud storage solutions today. S3 is designed to store any type of file or object, from images to videos to documents. It’s built on the same reliable infrastructure that powers Amazon’s e-commerce platform, ensuring high durability and availability.

S3 is perfect for general-purpose file storage. It provides fast access and scalability, making it suitable for everything from website assets to backup storage for applications. However, not all files need to be accessed frequently, which is where Amazon S3 Glacier provides an option for lower-cost storage.

Amazon S3 Glacier: Low-cost archival storage

If you don’t need to access your files often, Glacier offers a low-cost alternative for archiving them. Glacier has much lower storage costs than standard S3 storage, but the tradeoff is higher latency, as retrieving data from Glacier takes longer. It’s ideal for data you don’t need to access regularly but still want to keep safe for compliance, backups, or long-term retention.
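One common way to combine the two is an S3 lifecycle rule that moves objects to Glacier after a set number of days. The sketch below builds such a rule as the dictionary the boto3 S3 client accepts as `LifecycleConfiguration` in `put_bucket_lifecycle_configuration`. The `logs/` prefix and the 90-day threshold are assumptions for illustration.

```python
def archive_rule(prefix: str, days_before_archive: int) -> dict:
    """Build a lifecycle configuration that archives objects under a prefix."""
    return {
        "Rules": [
            {
                "ID": f"archive-{prefix.strip('/')}",
                "Filter": {"Prefix": prefix},  # only objects under this prefix
                "Status": "Enabled",
                "Transitions": [
                    # Move objects to the Glacier storage class after N days.
                    {"Days": days_before_archive, "StorageClass": "GLACIER"}
                ],
            }
        ]
    }


lifecycle = archive_rule("logs/", 90)
print(lifecycle["Rules"][0]["ID"], lifecycle["Rules"][0]["Transitions"])
```

This way, fresh files stay quickly accessible in S3 while older ones move to cheaper storage without manual work.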

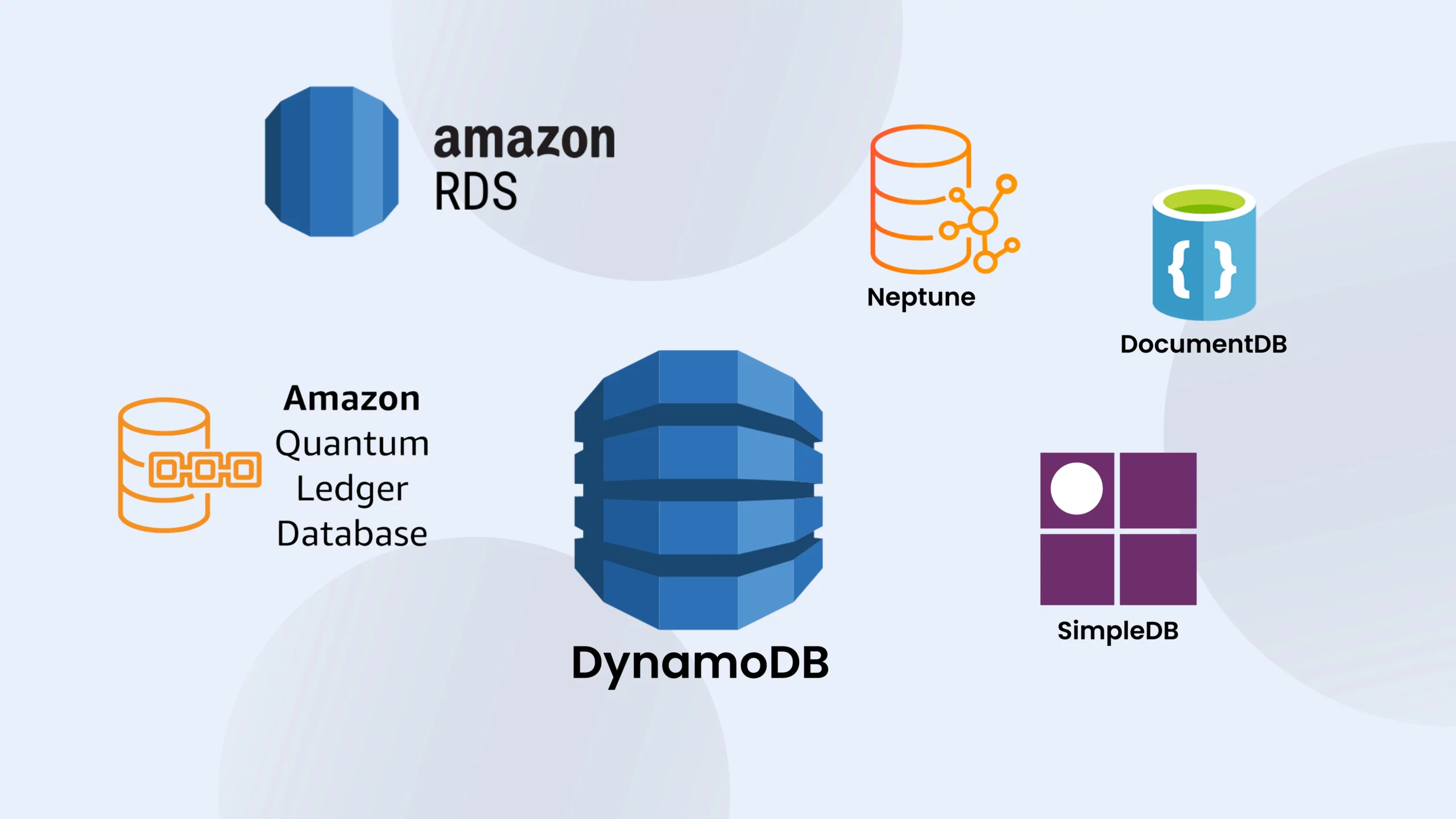

Database services

Databases are a core component of cloud applications, and AWS offers many options to suit different needs. These services fall under the umbrella of cloud data management services, helping businesses store, manage, and access data.

SimpleDB: The first step

The very first database service offered by AWS was SimpleDB, a general-purpose NoSQL database. As the name suggests, it was simple. A bit too simple for most real-world use cases. It lacked many of the features developers needed as applications became more complex.

DynamoDB: Scalable and fast

To deal with these limitations, AWS introduced DynamoDB, a high-performance document database that easily scales horizontally. It was designed for speed and cost-efficiency and offered lightning-fast read and write performance. It’s a great choice for workloads that need consistent performance at scale, such as real-time bidding systems or gaming backends.

DynamoDB plays a strong role in the cloud-based data management services market, offering a cost-effective alternative to more traditional databases, especially for applications with unpredictable traffic patterns.
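To show what a DynamoDB record looks like for a gaming backend, here is a sketch of a `put_item` request in the low-level format used by the boto3 DynamoDB client, where every attribute carries a type tag. The table name `GameScores` and the attribute names are assumptions for illustration.

```python
def score_item(player_id: str, game_id: str, score: int) -> dict:
    """Build keyword arguments for writing one score record."""
    return {
        "TableName": "GameScores",  # hypothetical table
        "Item": {
            "player_id": {"S": player_id},  # "S" marks a string attribute
            "game_id": {"S": game_id},
            "score": {"N": str(score)},     # numbers are sent as strings
        },
    }


request = score_item("player-42", "match-2025-001", 1500)
print(request["Item"])
```

In this sketch, `player_id` could serve as the partition key and `game_id` as the sort key, so all of a player’s scores can be looked up together. That choice is a design assumption, not a requirement.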

However, DynamoDB isn’t ideal for apps that require complex relationships between data. In other words, it’s not built for relational models. If you’re coming from a MongoDB background, you might be interested in DocumentDB.

DocumentDB: MongoDB-compatible (sort of)

Amazon DocumentDB is a document database with an API designed to be compatible with MongoDB. It’s technically not MongoDB. It was created as a response to changes in MongoDB’s open-source license. AWS engineered DocumentDB to emulate the MongoDB API closely enough that many MongoDB applications, drivers, and tools work with it, though not every MongoDB feature is supported. This lets developers run MongoDB-like applications without licensing conflicts. It’s a solid option within the growing market of enterprise data management cloud services, especially for companies already invested in MongoDB-based architecture.

RDS: Managed SQL databases

If you prefer traditional relational databases, AWS offers Amazon RDS (Relational Database Service). RDS supports popular engines like:

- MySQL

- PostgreSQL

- MariaDB

- Oracle

- SQL Server

With RDS, AWS handles the heavy lifting: backups, patching, scaling, and replication are all managed for you. It’s a classic example of cloud managed data center services, where infrastructure and maintenance are handled by the cloud provider, freeing developers to focus on their apps.

It’s also worth noting that if you’re comparing platforms like Jira Service Management Cloud vs Data Center, this shift toward managed services (like RDS) shows how cloud models can reduce operational overhead and increase flexibility in enterprise settings.

Neptune: For highly connected data

For workloads involving highly connected data, like social networks, recommendation engines, or fraud detection, AWS offers Neptune, a graph database designed to model complex relationships. It supports the property graph and RDF models, with query languages like Gremlin and SPARQL, and it’s optimized for performance in graph-based queries that would be slow and clunky in traditional relational databases.

QLDB: Immutable ledger for trusted records

If you need a cryptographically verifiable transaction log, Amazon QLDB (Quantum Ledger Database) provides an immutable, append-only ledger. It’s like a centralized version of blockchain: great for use cases like audit logs, supply chain tracking, and financial records where data integrity and traceability are critical.

This aligns with the trend of businesses exploring more advanced Oracle data management cloud services to secure their most sensitive transaction records.

AWS and Oracle

Speaking of Oracle, AWS also supports the use of Oracle databases through services like RDS and more specialized integrations. For organizations that rely on Oracle’s enterprise tools, services like the Oracle customer data management cloud service and Oracle data management cloud services can be extended or migrated to AWS, helping businesses modernize without losing their legacy investments.

This interoperability is a great example of how AWS contributes to a broader, evolving ecosystem of enterprise data management cloud services, letting businesses run hybrid systems or fully cloud-native applications with their preferred tools.

Choosing the right tool

What fits one team won’t necessarily fit another, and there’s no one-size-fits-all when it comes to databases. Still, AWS makes it easy to pick the right tool for the job:

- SimpleDB: For ultra-basic, lightweight NoSQL use cases.

- DynamoDB: For fast, scalable NoSQL applications.

- DocumentDB: For MongoDB-like apps without licensing worries.

- RDS: For traditional SQL databases with full management.

- Neptune: For graph-based data and connected relationships.

- QLDB: For immutable, verifiable transaction records.

Analytics and big data

Collecting data is one thing, but analyzing it is true magic. To do that effectively, you first need a place to store and organize your data.

Redshift: The go-to data warehouse

A popular option for structured analytics is Amazon Redshift, AWS’s fully managed data warehouse. The name is commonly read as a nod to helping organizations “shift” away from legacy systems like Oracle.

Redshift excels at combining data from multiple sources across an enterprise, allowing you to analyze it all in one centralized location. This structure is especially useful for:

- Business intelligence

- Reporting

- Machine learning

With all your data in one place, your organization can generate insights faster and train predictive models more efficiently. Redshift is a strong player in the cloud based analytics service space, as it offers scalability, speed, and integration with the rest of the AWS ecosystem.

Lake Formation: Unstructured data, organized

But not all data fits neatly into rows and columns. If you need to handle large volumes of unstructured or semi-structured data, try out AWS Lake Formation.

With Lake Formation, you can build a secure, scalable data lake using services like S3 as the storage layer. Data lakes are useful when:

- You have diverse data formats (logs, images, video, JSON)

- You want to integrate with machine learning and big data frameworks

- You need to query across structured and unstructured data together

Often, organizations use data warehouses and data lakes together, giving analysts and data scientists access to the broadest possible set of information. Combining both is key in delivering full-stack analytics cloud services.

Real-time data: Streaming with Kinesis or Kafka

Sometimes, analyzing data after the fact isn’t fast enough. If you need to process and visualize real-time data, Amazon Kinesis is the tool for the job. It can capture data from:

- Application logs

- IoT devices

- Clickstreams

- Infrastructure metrics

Once captured, data can be routed to your favorite business intelligence tools for immediate visualization and decision-making.
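As a small illustration of capturing one clickstream event, the sketch below builds the parameters that the boto3 Kinesis client accepts for `put_record`: a stream name, the payload as bytes, and a partition key. The stream name `clickstream-demo` and the event fields are assumptions for illustration.

```python
import json


def build_kinesis_record(stream_name: str, event: dict, partition_key: str) -> dict:
    """Build keyword arguments for sending one event to a Kinesis data stream."""
    return {
        "StreamName": stream_name,                  # hypothetical stream
        "Data": json.dumps(event).encode("utf-8"),  # payload must be bytes
        # Records that share a partition key are routed to the same shard.
        "PartitionKey": partition_key,
    }


record = build_kinesis_record(
    "clickstream-demo",
    {"user_id": "u-17", "page": "/pricing"},
    partition_key="u-17",
)
print(record["PartitionKey"], len(record["Data"]), "bytes")
```

Using the user ID as the partition key is one possible choice that keeps each user’s events in order. Other keys may spread load more evenly.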

If you’re looking for an open-source alternative, Apache Kafka is widely used in the industry. AWS offers Amazon MSK (Managed Streaming for Apache Kafka), giving you all the power of Kafka with the convenience of AWS management.

Massive-scale processing: EMR with Apache Spark

For workloads that require big data processing at scale, Amazon EMR (Elastic MapReduce) lets you run frameworks like Apache Spark, Hadoop, and Presto.

These tools are designed to split large data jobs into parallel tasks, making it possible to process terabytes or even petabytes of data. EMR is a good fit when you need cloud-scale analytics within the AWS environment.

It’s a powerful option for organizations looking to do more with their data, whether for modeling, log analysis, or training machine learning systems.

AWS Glue: ETL made easy (no code required)

Not every developer wants to get into Spark or manage a streaming pipeline. If you’re looking for a simpler, serverless way to move and clean your data, AWS Glue is a fantastic option.

Glue is an ETL (Extract, Transform, Load) service that connects easily to AWS data sources like:

- Amazon S3

- Aurora

- Redshift

It also includes Glue Studio, a visual interface that lets you build ETL jobs without writing any code, which is perfect for teams with limited engineering resources or tight deadlines.

AWS Glue is part of the analytics cloud service portfolio that simplifies data engineering, making data more accessible and usable.

Industry connections: Beyond AWS

AWS isn’t the only player in the analytics game, and in some cases, hybrid approaches are common. For instance:

- If your team uses Salesforce, Salesforce Service Cloud Analytics offers native dashboards and visualizations tailored to customer service and CRM data.

- If your environment includes Oracle tools, Oracle Analytics Cloud Service provides robust business intelligence features and works seamlessly with other Oracle cloud platforms.

These services often integrate with or complement AWS workflows, especially in enterprises running multi-cloud or hybrid-cloud strategies.

Why it all matters

The biggest advantage of collecting and organizing massive datasets is what you can do with them next. With the right infrastructure in place, like warehouses, data lakes, streaming, and ETL, you can build predictive models that help forecast customer behavior, optimize logistics, detect fraud, and much more.

This is where analytics cloud services become so prominent, as with their help, businesses can both understand the past and shape the future.

Machine learning and AI services

Starting with machine learning on AWS doesn’t mean you have to do it from scratch. AWS offers a wide range of tools that help simplify the machine learning journey, whether you’re an expert or just starting out.

As a leading cloud AI ML service provider, AWS continues to expand its portfolio to support everything from training models to deploying production-grade AI systems across industries like healthcare, finance, retail, and beyond.

Start with the right data

Every ML project starts with lots of data. If you don’t have enough high-quality data of your own, you can use AWS Data Exchange to purchase or subscribe to curated datasets from third-party providers. These datasets can jumpstart your work in common AI ML use cases such as demand forecasting, fraud detection, and predictive maintenance.

Once your data is in the cloud, AWS makes it easy to store, secure, and prepare it, setting the stage for seamless data analytics and AI ML using cloud services and tools.

Train and deploy with SageMaker

Amazon SageMaker is the star of AWS’s machine learning toolkit. It supports popular frameworks like TensorFlow, PyTorch, and Scikit-learn, and allows you to do everything from data preprocessing and training to deployment and monitoring, all in one place.

SageMaker supports a broad spectrum of users:

- Developers and data scientists can use managed Jupyter notebooks and powerful GPU instances.

- Business analysts can use SageMaker Canvas, a no-code interface to build models using a point-and-click approach.

- For anyone needing automation, tools like SageMaker Autopilot help automate model tuning and optimization.

SageMaker also powers many AI ML solutions for enterprise customers, giving them flexibility and scale without needing to manage infrastructure directly.

Prefer APIs? Try pre-trained AI services

Not ready to train your own models? No problem. AWS offers a rich set of pre-trained AI services that provide instant access to capabilities like:

- Image and video recognition with Amazon Rekognition

- Chatbots and voice interfaces using Amazon Lex

- Text analysis and sentiment detection via Amazon Comprehend

- Text-to-speech conversion with Amazon Polly

- Speech recognition through Amazon Transcribe

- Translation between languages using Amazon Translate

These services give you access to sophisticated ML features without having to write or train a single model, which is ideal for small teams.
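To give a sense of how little code these pre-trained services need, here is a sketch of sentiment detection with Amazon Comprehend. The helper takes the client as a parameter, so it runs here against a small stand-in class. `FakeComprehend` and its canned answer are assumptions for the demo, not part of AWS.

```python
def detect_sentiment(client, text: str) -> str:
    """Return the overall sentiment label Comprehend assigns to a text."""
    response = client.detect_sentiment(Text=text, LanguageCode="en")
    return response["Sentiment"]  # e.g. POSITIVE, NEGATIVE, NEUTRAL, MIXED


class FakeComprehend:
    """Stand-in for a real client so the sketch runs without AWS access."""

    def detect_sentiment(self, Text, LanguageCode):
        # Canned response that mimics the shape of the real one.
        return {"Sentiment": "POSITIVE", "SentimentScore": {"Positive": 0.98}}


label = detect_sentiment(FakeComprehend(), "The new release is fantastic!")
print(label)
```

With boto3 installed and credentials configured, the stand-in could be swapped for a real `comprehend` client while the helper stays the same.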

Generative AI and foundation models

As the industry moves toward generative AI, AWS is also stepping up its game. One of the most exciting tools in this area is Amazon Bedrock, a service that provides access to foundation models from top model providers like Anthropic (Claude), Meta, and AI21 Labs, all through a single, managed API.

Pair this with SageMaker JumpStart, which offers pre-built models and templates, and you’ve got everything you need to prototype, customize, and scale advanced AI apps, from chatbots to summarization tools.

These tools reflect how cloud AI ML services are evolving to meet the demands of businesses seeking cutting-edge innovation with minimal setup.

AI labs, consulting, and the human touch

AWS also supports companies through AI ML Consulting and AI ML Lab programs, offering expert guidance, proof-of-concept development, and training to help teams bring AI ideas to life.

Whether you’re a startup testing ideas or a large enterprise seeking cloud AI ML solutions, AWS and its partners provide the expertise and infrastructure to guide you from planning to production.

Security and identity

Beyond computing and storage, developers across all kinds of projects rely on several key tools, especially security and user management.

Identity and Access Management (IAM). This is AWS’s central tool for security. With IAM, you can define who has access to what within your AWS environment. Whether it’s a developer accessing a specific S3 bucket or a service interacting with a database, IAM lets you create fine-grained permissions and roles to keep everything secure and under control.
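As an example of fine-grained permissions, here is a sketch of an IAM policy document that lets a developer list and read objects in a single S3 bucket and nothing else. The bucket name `example-reports-bucket` is an assumption for illustration.

```python
import json


def read_only_bucket_policy(bucket_name: str) -> dict:
    """Build an IAM policy document granting read-only access to one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                # Reading applies to the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


policy_document = read_only_bucket_policy("example-reports-bucket")
print(json.dumps(policy_document, indent=2))
```

Anything not explicitly allowed is denied by default, so a user with only this policy could not write or delete objects.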

Amazon Cognito. If you’re building a web or mobile app that includes user login functionality, Cognito is your go-to tool. It handles user authentication (including support for third-party providers like Google, Facebook, and Apple), user management, and session handling, so you don’t have to build all that from scratch.

Infrastructure as Code and frontend integration

Now that you’re familiar with many AWS services, you’ll probably want a more organized and repeatable way to provision your infrastructure, for example, with AWS CloudFormation.

With CloudFormation, you can define your infrastructure as code using YAML or JSON templates. This means you can spin up complex environments, potentially involving hundreds of AWS services, with just a single click or command. It’s a huge time-saver, especially when you want consistency across dev, test, and production environments.
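For a sense of what such a template contains, here is a minimal sketch that describes a single versioned S3 bucket and prints it as JSON. The logical name `ExampleBucket` and the description text are assumptions for illustration. Real templates usually define many more resources.

```python
import json

# A tiny CloudFormation template expressed as a Python dictionary.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Illustrative template with one versioned S3 bucket",
    "Resources": {
        "ExampleBucket": {  # hypothetical logical name
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "VersioningConfiguration": {"Status": "Enabled"},
            },
        }
    },
    "Outputs": {
        # Ref on a bucket resource resolves to the bucket name.
        "BucketName": {"Value": {"Ref": "ExampleBucket"}},
    },
}

template_json = json.dumps(template, indent=2)
print(template_json)
```

The printed JSON could be saved to a file and kept in version control, which is what makes the same environment reproducible across dev, test, and production.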

Once your infrastructure is in place, the next step is often connecting it to a front-end application, like a web app, or something on iOS or Android. That is the task for AWS Amplify.

Amplify provides a suite of tools and SDKs that make it easy to connect your front-end apps to AWS services, whether you’re using JavaScript, React, Vue, Next.js, or even mobile frameworks. And recently, Amplify has become even more powerful with enhanced low-code tooling, a visual UI editor for faster app development, and deeper integrations with popular frameworks.

Together, CloudFormation and Amplify give you a powerful combo: one for building your backend infrastructure and the other for connecting it to your users.

What is a software factory?

A software factory is not a physical location. It’s a repeatable, automated, and standardized process for producing software. The idea is borrowed from industrial manufacturing, where factories streamline production to ensure efficiency, quality, and consistency.

In tech, a software factory includes:

- Development tools and platforms (e.g., GitHub, GitLab, Azure DevOps)

- CI/CD pipelines

- Infrastructure-as-Code (IaC) (e.g., Terraform, AWS CloudFormation)

- Testing automation

- Monitoring and security tools

This is like a software assembly line, where code is written, built, tested, deployed, and monitored using pre-defined workflows, often all in the cloud.

What is digital factory software?

Digital factory software takes the software factory concept further by incorporating it into a cloud-native ecosystem and sometimes extending it to business operations and product development too. In this context, it can mean two things:

1. Digital factory for software development (DevOps/DevSecOps). This refers to cloud-based platforms that manage the entire software development lifecycle.

These platforms are often branded as “digital software factories” by cloud service providers, especially in enterprise and government sectors, to highlight their ability to scale secure, agile development across teams.

2. Digital factory for smart manufacturing (Industry 4.0). In industrial settings, digital factory software means a cloud-based platform that models, monitors, and optimizes manufacturing processes.

Tips for new AWS users

Getting started with AWS can feel like walking into a huge supermarket where everything looks useful, but you’re not sure where to begin. Here are some tips to help you get off on the right foot:

Which services to start with? If you’re just exploring AWS, start simple:

- EC2: Launch a virtual machine and get familiar with the core of cloud computing.

- S3: Try uploading and storing files. S3 is foundational for almost every project.

- RDS or DynamoDB: Choose RDS for relational databases, or DynamoDB for NoSQL needs.

- Lambda: A great entry point to serverless computing. Write a simple function and trigger it with a click.

- CloudWatch: Begin tracking performance and logs. It’s key to understanding what’s happening behind the scenes.

Common pitfalls to avoid

1. Forgetting to shut down services. EC2 instances, RDS databases, and even unused EBS volumes can rack up costs.

2. Skipping IAM. Don’t use root credentials for everything. Set up IAM roles and permissions right away.

3. Not understanding pricing. AWS charges by the second or request in some cases. Always check the pricing model of a service before deploying at scale.

4. Overcomplicating the stack. Start simple. Avoid throwing 10 services at a “Hello World” project.

Free-tier options in 2025

AWS still offers a generous free tier in 2025:

- EC2: 750 hours/month of t2.micro or t3.micro instances (for 12 months).

- S3: 5GB of standard storage + 20,000 GET and 2,000 PUT requests.

- Lambda: 1 million requests and 400,000 GB-seconds of compute time per month.

- RDS: 750 hours of usage for db.t2.micro instances, with 20GB of storage.

- CloudWatch: Basic monitoring with 10 custom metrics and 5GB of log ingestion.

These free-tier offerings are perfect for experimentation and small MVPs.
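To check whether a small project is likely to stay within the Lambda allowance listed above, you can estimate GB-seconds as invocations × duration in seconds × memory in GB. The sketch below does that arithmetic. The workload numbers (500,000 invocations, 200 ms, 128 MB) are assumptions for illustration.

```python
# Monthly Lambda free-tier limits as listed above.
FREE_REQUESTS = 1_000_000
FREE_GB_SECONDS = 400_000


def lambda_usage(invocations: int, avg_duration_ms: float, memory_mb: int) -> dict:
    """Estimate monthly Lambda usage and compare it with the free tier."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return {
        "requests": invocations,
        "gb_seconds": gb_seconds,
        "within_free_tier": (
            invocations <= FREE_REQUESTS and gb_seconds <= FREE_GB_SECONDS
        ),
    }


usage = lambda_usage(invocations=500_000, avg_duration_ms=200, memory_mb=128)
print(usage)
```

Here the estimate works out to roughly 12,500 GB-seconds, well under both limits. This is only a rough check: it ignores other charges such as data transfer, so the official pricing page remains the reference.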
