Software development trends don’t come and go with the start of a new year. They form over decades, and what you saw yesterday will keep setting the stage the day after tomorrow. Let’s see which technologies will rule the world in 2024 and which of them, hopefully, can change it for the better. Here’s a glimpse of what to expect from 2024 and how new technologies will affect the way we search for and hire IT specialists.

Content:

- What do we take into 2024 with us?

- The limits we’re going to face – the end of Moore’s law

- 5G and its limitations

- Satellite network

- Switch from making a profit to returning investments

- Making your code mobile-friendly

- Web 3.0

- Containerization

- How do you make money as a software developer in 2024?

- AI

- AR and VR

- Quantum computing

- Summing up

What do we take into 2024 with us?

First, let’s go through some obvious things that are happening today and will shape the future of technology, at least for a couple of years.

The cloud

Clearly, the cloud’s rise to prominence was significantly accelerated by the COVID-19 pandemic. A migration to the cloud that was expected to take years happened in just a few months.

Mobiles

Mobile devices currently enjoy peak popularity, reigning as the most widely used computing devices globally. The statistics are telling: there are more mobile phones than adults in the world. With nearly 15 billion mobile devices in operation, it’s essential for developers to ensure every bit of their code is optimized for mobile use. Today, our mobile phones have become our digital appendages. To truly understand this, try leaving your phone at home when you head to the office. The sense of missing something vital will be palpable. Discussing the future of computing technology and software development without considering the evolution of mobile phones would be incomplete.

Big data

Another technology that defines our present is big data. It emerges as a byproduct of the extensive use of mobile devices and social media, aggregating vast amounts of information into a single repository. This wealth of big data fuels the development and operation of machine learning models, which learn and adapt based on this data. As of recent years, the volume of data generated daily is estimated to be in the exabytes, indicating a massive potential for insights and advancements.

These are all obvious things – more devices, more cloud, and more data. What are the unexpected things?

Source: The New Yorker

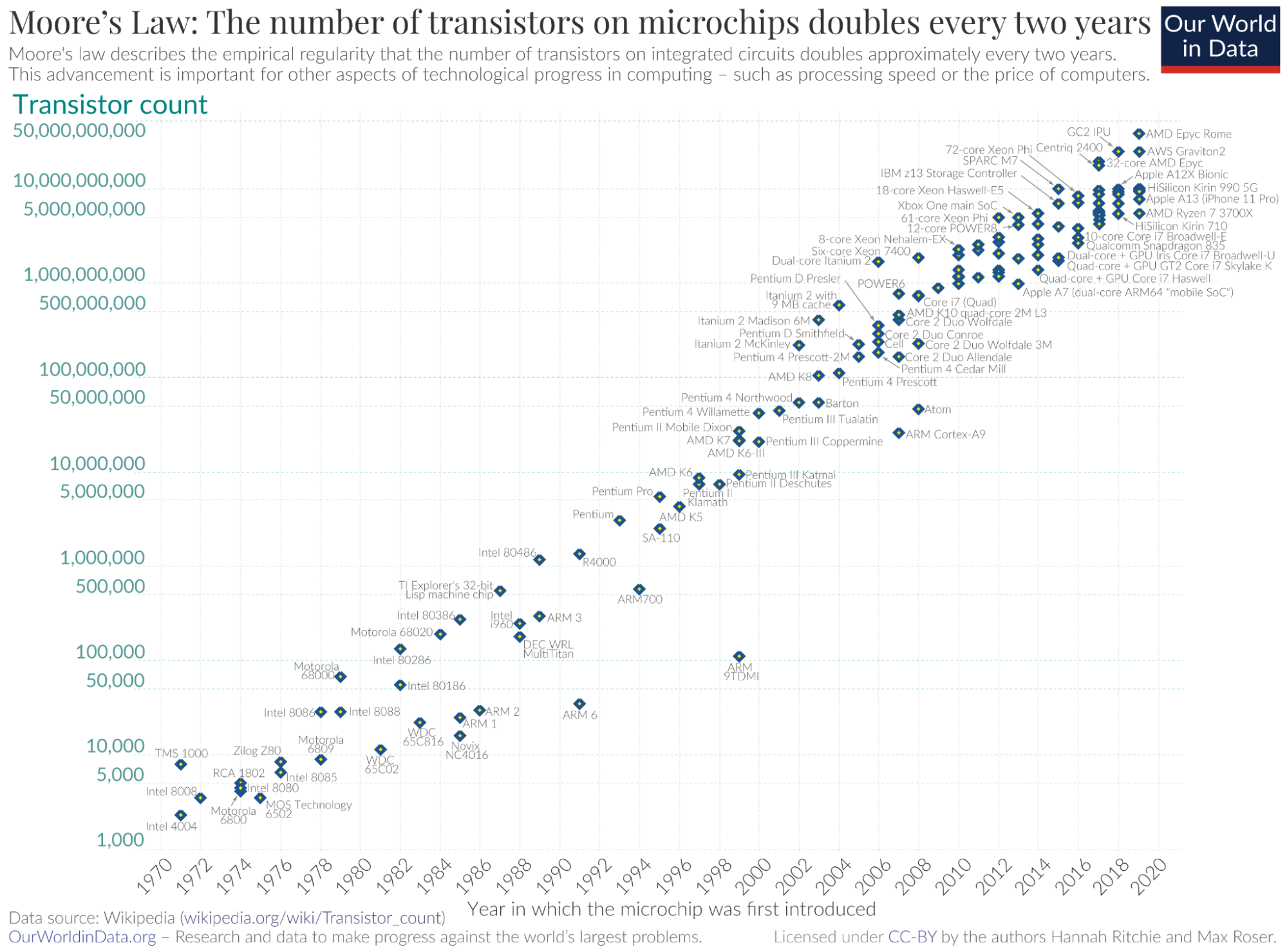

The limits we’re going to face – the end of Moore’s law

Moore’s Law, a cornerstone prediction in technology, posits that the number of transistors on a microchip (a critical component of computers) will approximately double every two years. This trend suggests that electronic devices like computers and phones become faster, more compact, and more cost-effective at a steady pace. Consequently, we can anticipate our gadgets becoming increasingly powerful every few years, often without a significant increase in price. This phenomenon has been instrumental in evolving computers from large, costly units with limited functions to the remarkably small, affordable, and powerful devices we carry in our pockets today. Recent statistics indicate an exponential increase in computing power, with today’s smartphones being more powerful than the entire NASA computing infrastructure during the Apollo missions.
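To make the doubling rule concrete, here is a minimal Python sketch. It assumes an idealized, perfectly regular doubling every two years, and the starting count of one million transistors is a made-up number for illustration, not a figure for any real chip.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count, assuming one doubling per period."""
    return initial_count * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    start = 1_000_000  # hypothetical starting transistor count
    for years in (2, 10, 20):
        growth = projected_transistors(start, years) / start
        print(f"after {years:>2} years: about {growth:,.0f}x the transistors")
```

Under this assumption, twenty years of doubling works out to roughly a thousandfold increase, which is why even a small slowdown in the doubling period matters so much.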

However, Moore’s Law is encountering physical limitations. As transistors, which are the tiny switches processing information in electronic devices, continue to be miniaturized and densely packed onto microchips, we’re nearing the threshold of how small these components can feasibly be made.

So, what do we do now? Today, the industry is exploring alternative ways to improve computing power, such as the development of new materials, the adoption of 3D chip architectures, and the advancement of cutting-edge fields like quantum computing and neuromorphic computing. The latter, inspired by the human brain’s efficiency and processing power, represents a significant leap in computational design.

These technologies are viewed as key alternatives to continue increasing computing power beyond the limitations of Moore’s Law. However, as of 2024, it may be prudent to recalibrate our expectations regarding the exponential growth of computing capabilities. While these new approaches hold promise, they are still in developmental stages. Recent trends indicate a gradual shift in focus from sheer processing speed to energy efficiency and specialized computing, acknowledging the need for sustainable and targeted technological advancement in an era where Moore’s Law’s exponential trajectory is no longer feasible.

5G and its limitations

The development of 5G and the ongoing research into 6G represent huge leaps in telecommunications technology. 5G offers a big increase in data density, meaning much faster movement of data between points of computing. What else?

- 5G is potentially up to 100 times faster than 4G.

- It reduces latency to just a few milliseconds, facilitating real-time data processing.

- 5G can support a higher number of connected devices simultaneously, which is essential for the IoT.

- 5G networks use energy and spectrum more efficiently, which makes them more sustainable and capable of serving denser urban areas.

However, as of today, it comes with a limitation. The highest 5G speeds rely on higher-frequency radio bands, and those signals penetrate physical obstacles poorly! With 2G, you could use your mobile in the basement, and it would work, but it is not like that with high-band 5G.

Why do 5G limitations occur?

Think of a wireless signal like sound. A low-pitched sound, such as the bass from a neighbor’s stereo, travels far and passes through walls, but it carries little detail. This is like the low-frequency bands used by older networks: modest data rates, long reach.

A high-pitched sound can carry much more detail, but if there’s something like a wall (a physical obstacle) in between, the wall absorbs or blocks most of it, so very little gets to the other side. You hear the neighbor’s bass through the wall, not the lyrics.

The fastest 5G bands behave like the high-pitched sound. They transmit a lot of data, so they are great at giving you fast internet and lots of information quickly when there’s a clear path. But they have a harder time getting through obstacles like walls, trees, or other physical barriers because these absorb or block more of the signal. That’s why 5G can have issues with penetration and why things like walls can become obstacles to the connection.
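One physical factor behind this is carrier frequency: the fastest 5G bands use much higher frequencies than older networks. The sketch below uses the standard free-space path loss formula to compare two bands over the same distance. Treat it as a rough illustration only: it models open space and ignores walls entirely (which typically make things even worse for high frequencies), and the 700 MHz and 28 GHz values are example bands picked for this comparison.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss in dB: 20 * log10(4 * pi * d * f / c)."""
    return 20 * math.log10(
        4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    )


if __name__ == "__main__":
    distance_m = 100.0
    low_band_hz = 700e6   # example low-band frequency (assumption)
    high_band_hz = 28e9   # example millimeter-wave frequency (assumption)

    low = free_space_path_loss_db(distance_m, low_band_hz)
    high = free_space_path_loss_db(distance_m, high_band_hz)
    print(f"low band loss:  {low:.1f} dB")
    print(f"high band loss: {high:.1f} dB")
    print(f"extra loss at the higher frequency: {high - low:.1f} dB")
```

With these example bands, the higher frequency loses about 32 dB more over the same distance, before any wall gets in the way.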

So, even though 5G is crucial for the development of other technologies, we’re still dealing with the penetration issue. Unfortunately, there doesn’t seem to be a simple solution, and the network will most likely be a constraint for software developers in 2024.

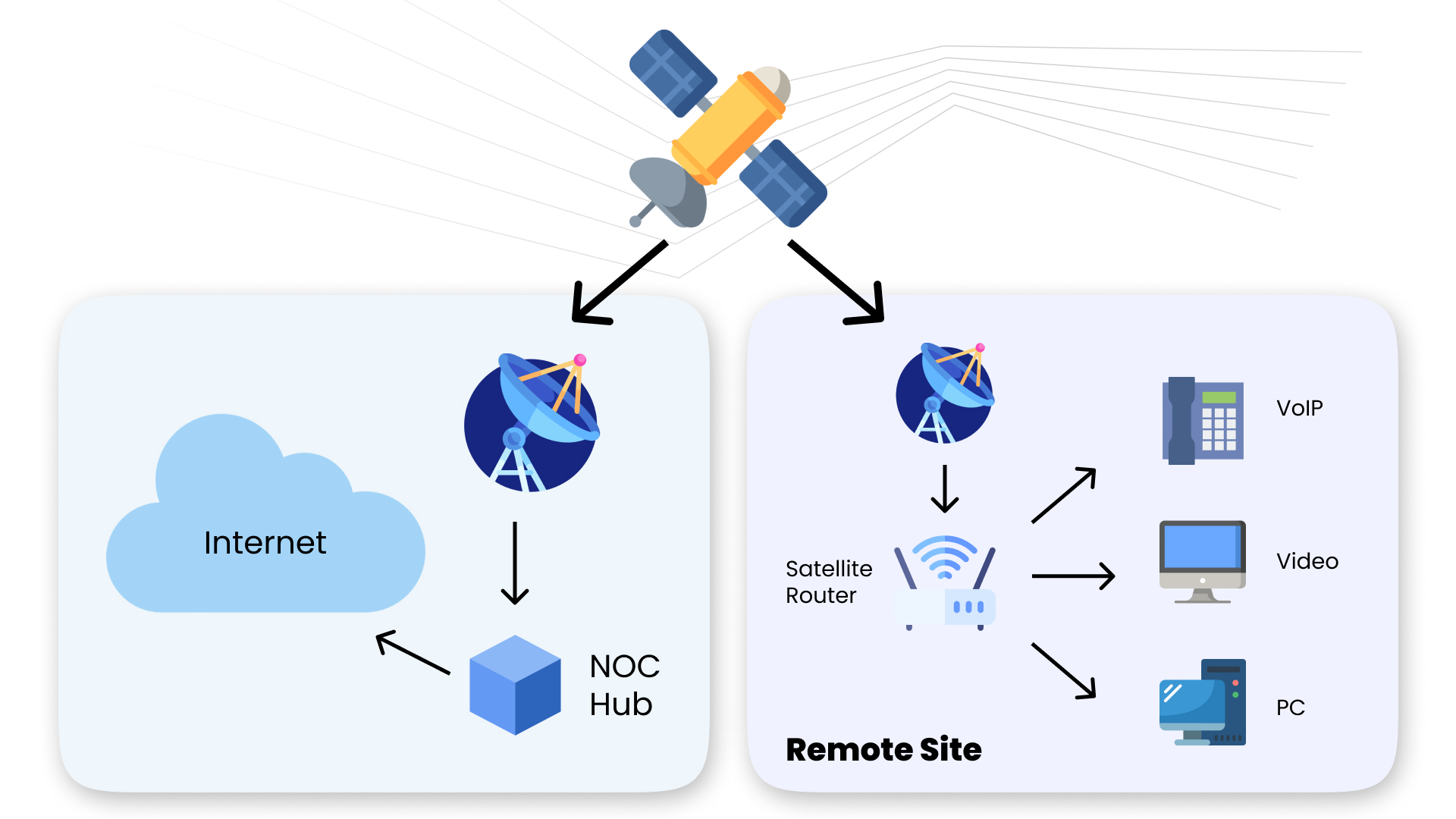

Satellite network

Satellite networks, like Starlink, can provide internet access in remote and rural areas where traditional broadband infrastructure is not feasible or too expensive to deploy. They can also be used in disaster-stricken areas where ground infrastructure is damaged. Widespread satellite networks can bridge the digital divide, allowing more people access to online education, healthcare services, and digital economies.

We hope that the emergence of satellite internet providers like Starlink will foster competition, leading to improved services and lower costs in the broadband market. With more people online, there’s a larger market for digital products and services, and there are more opportunities for people all around the globe to offer their digital services to customers on other continents. In essence, the trend toward satellite internet is not just about connectivity; it’s about unlocking global potential.

While satellite internet promises global coverage, its performance in terms of speed and latency, particularly in comparison to fiber optics, is a point of discussion. Another point to consider is that satellite networks must navigate complex international regulatory frameworks and orbital space management. The increasing number of satellites means more space debris and environmental impact, which should also be taken into consideration.

Switch from making a profit to returning investments

The pandemic brought us extra-fast cloud migration and remote work as the new normal, but it also had an economic impact. 2024 may well be a year of global economic recession. The last time we witnessed such a downturn was in 2008-2009, but back then, the IT sphere wasn’t much affected. So, after almost 20 years of constant growth, IT companies feel quite uncomfortable with the new economic reality. They lay off people in large numbers, cut R&D departments when they’re unsure their investments will pay off, and try to use AI instead of people.

This situation brings us to the essence of intellectual technologies and their role in society: We are productivity amplifiers. We help companies survive during tough times. And this is what we will do in 2024.

We all try to save money, and if your company is no exception, consider outsourcing and staff augmentation as ways to lower your expenses.

Let’s sum it up a bit. We live in a cloud world and mostly use smartphones. We cannot count on computing getting much faster, and in general, companies and customers are really tight with their cash. So, what are our opportunities for growth?

Making your code mobile-friendly

Today’s users expect software to be universally compatible and function seamlessly across all devices and browsers. This demand presents a significant challenge, as the era when developers could code software to run specifically on the device they were using to write this code has long passed – a shift that’s been evident for over two decades.

Today, software has to be adaptable and responsive across a dozen platforms, from smartphones to laptops, each with its own operating system and browser configuration. User preferences are diverse, with a broad spectrum of devices and browsers in use globally. That calls for a more complex and nuanced approach to software development, where adaptability and cross-platform functionality are pivotal. It’s a far cry from the simpler times when software was tailored to a single, specific environment.

Progressive web app

What do we have now? There is a market of browsers that includes Chrome, Safari, Edge, and Firefox, and you have to make sure that your web app works perfectly on each of them. The progressive web app (PWA) movement is all about making websites behave more like apps on your phone. Imagine you visit a website on your phone or computer, and it feels just like you’re using an app. It’s fast, can work without a strong internet connection, and even sends you notifications like a regular app. That’s what a progressive web app is.
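The “works without a strong connection” part usually comes down to a cache-first strategy: answer from a local copy when one exists and go to the network only when it doesn’t. Real PWAs implement this in a JavaScript service worker in the browser; the Python sketch below only illustrates the idea in a language-neutral way, and `CacheFirstFetcher` and `fake_network` are hypothetical names invented for this example.

```python
from typing import Callable, Dict


class CacheFirstFetcher:
    """Serve from a local cache when possible, otherwise use the network."""

    def __init__(self, network_fetch: Callable[[str], str]) -> None:
        self._network_fetch = network_fetch
        self._cache: Dict[str, str] = {}

    def fetch(self, url: str) -> str:
        if url in self._cache:
            return self._cache[url]        # cached copy, no network needed
        body = self._network_fetch(url)    # may raise if we are offline
        self._cache[url] = body            # remember it for next time
        return body


if __name__ == "__main__":
    network = {"online": True}

    def fake_network(url: str) -> str:
        # Hypothetical stand-in for a real HTTP request.
        if not network["online"]:
            raise ConnectionError("offline")
        return f"<html>content of {url}</html>"

    fetcher = CacheFirstFetcher(fake_network)
    fetcher.fetch("/index.html")           # first visit goes to the network
    network["online"] = False              # the connection drops
    print(fetcher.fetch("/index.html"))    # still served, from the cache
```

A page that was never visited would still fail offline here, which is why real apps usually pre-cache their essential files when they are installed.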

WebAssembly

WebAssembly is a complementary technology that can enhance the capabilities of PWAs and other web applications. It is a low-level, binary instruction format that enables code written in languages like C, C++, and Rust to run on the web at near-native speed. Since it’s a compiled binary format, it’s more efficient and faster than JavaScript for certain types of tasks, particularly those requiring intensive computation, like graphics rendering, video processing, or complex calculations.

So, with WebAssembly, you can program in the language of your choice and not only in JS. You get all the benefits of web development, but you use the language you want to use. This is the area of growth for the smartest people in the industry.
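To see what “binary format” means in practice: every WebAssembly module starts with the same eight bytes, a four-byte magic value (a zero byte followed by `asm`) and a four-byte little-endian version number, currently 1. The small Python check below is only a sketch of that header test, not a validator for a full module, and the function name is made up for this example.

```python
WASM_MAGIC = b"\x00asm"                      # first four bytes of any module
WASM_VERSION_1 = (1).to_bytes(4, "little")   # version field, little-endian

# The header alone already forms the smallest possible (empty) module.
EMPTY_MODULE = WASM_MAGIC + WASM_VERSION_1


def looks_like_wasm(module_bytes: bytes) -> bool:
    """Return True if the bytes start with a WebAssembly version 1 header."""
    return (
        module_bytes[:4] == WASM_MAGIC
        and module_bytes[4:8] == WASM_VERSION_1
    )


if __name__ == "__main__":
    print(EMPTY_MODULE.hex())                        # header bytes as hex
    print(looks_like_wasm(EMPTY_MODULE))             # a real header
    print(looks_like_wasm(b"function add() {}"))     # JavaScript source text
```

Whatever language you compile from, the browser receives bytes in this format rather than source text, which is part of why it can start executing them quickly.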

Web 3.0

Much of the Web 3.0 discussion revolves around blockchain and cryptocurrencies, which, while innovative, have been subject to market volatility and regulatory scrutiny. Many possible applications of Web 3.0 technologies are still in experimental stages, with real-world effectiveness and adoption yet to be proven on a large scale.

Decentralization

The decentralized web is the most reasonable aspect of Web 3.0. In the 1990s, the early internet embraced a form of decentralization that was dynamic and adaptable, but it lacked dependability. Web 2.0 is more centralized – we gave up our ownership of devices on the internet to service providers like Facebook.

So, now we are moving to decentralization again, but this time we want to do it better. Shopify is a good example of decentralization, as it gives you an engine for a fee, but it doesn’t compete with you. This model exemplifies the new era of decentralization, where the focus is on empowering users with the tools and autonomy to control their digital presence, while also ensuring reliability and user-friendly experiences. The evolution towards this balanced form of decentralization reflects a growing desire for both freedom and stability.

Blockchain

Blockchain, on the other hand, is widely misapplied. It has proved so easy to exploit that we have largely undermined its purpose. Blockchain faces significant scalability challenges, which need to be addressed for wide adoption. What’s more, the market around Web 3.0 technologies, especially cryptocurrencies and NFTs, has seen speculative investments, leading some to question the long-term value of these technologies.

The regulatory landscape for decentralized technologies is still uncertain, which adds to the skepticism regarding their long-term viability and integration into mainstream use. Some argue that the changes attributed to Web 3.0 are more evolutionary than revolutionary. They see it as a natural web progression rather than a distinct, transformative era.

The exploration of new ways to use the internet takes time. Your choice is to jump in and become a part of this exploration or witness it, see what pieces are left, and then make your own move.

Containerization

Think of containers as individual, sealed packages. Each package (container) has its own application, along with everything that the application needs to run, like libraries and settings. This is different from traditional setups where applications might share these resources on a single operating system. How does this setup enhance security?

- Isolation. Since each container is isolated from others, it’s like having applications in separate rooms with locked doors. If one application is compromised, the problem doesn’t easily spread to the others.

- Limited access. Containers can be set up to limit how much of the host system (the overall environment they’re running in) they can access. It’s like each application only being able to use certain parts of a building, reducing the risk of one compromised application affecting the entire system.

- Consistent environments. Containers are often used to create consistent environments across development, testing, and production. This consistency means there are fewer differences that could lead to unexpected security issues.

- Smaller attack surface. Containers usually have just what they need and nothing more. This minimalism means there’s less in each container that could be attacked, making it a smaller target for attackers.

- Easier updates and patches. When a security flaw is found, it’s easier to fix it in a containerized environment. You can update just the affected container without risking changes to the entire system.

In simple terms, containerization improves security by keeping applications separate and self-contained, limiting what parts of the system they can access, reducing the number of vulnerable spots, and making it easier to apply security updates.
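As a rough sketch of the “limited access” idea, the Python snippet below assembles a `docker run` command that uses several of Docker’s standard restriction flags. The image name `example/app:1.0`, the memory limit, and the user ID are placeholders, and this particular combination is an assumption for illustration: a web service, for instance, could not run with networking switched off.

```python
import shlex
from typing import List


def hardened_docker_run(image: str, memory_limit: str = "256m") -> List[str]:
    """Build a `docker run` command line with restrictive settings."""
    return [
        "docker", "run", "--rm",
        "--read-only",                           # read-only root filesystem
        "--cap-drop", "ALL",                     # drop all Linux capabilities
        "--security-opt", "no-new-privileges",   # block privilege escalation
        "--network", "none",                     # no network access at all
        "--memory", memory_limit,                # cap memory usage
        "--user", "1000:1000",                   # run as a non-root user
        image,
    ]


if __name__ == "__main__":
    # "example/app:1.0" is a hypothetical image name.
    print(shlex.join(hardened_docker_run("example/app:1.0")))
```

The function only builds and prints the command; nothing is executed, so it is safe to try on a machine without Docker installed.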

Containerization has become such a standard defense against ransomware attacks across the industry that some cyber insurance companies may even refuse to insure your app if you don’t use it – that’s how far the trend goes.

How do you make money as a software developer in 2024?

Making money as a software developer involves more than just understanding programming languages or the latest technologies. It’s about strategically positioning yourself in the market, continuously adapting your skills, and often, finding a niche where you can excel and provide unique value.

New technologies emerge constantly; however, the key to financial success isn’t necessarily to chase after every new trend. Instead, it’s important to critically assess whether these new technologies serve a practical purpose that the current technologies cannot fulfill.

- For example, consider the rise of cloud computing. Developers who recognized early on that traditional on-premises solutions were becoming insufficient for the growing data and scalability needs of businesses and thus shifted their focus to cloud platforms were able to capitalize on this shift. They developed expertise in cloud services like AWS, Azure, or Google Cloud, which in turn made them highly valuable in a market where more and more companies were moving towards cloud solutions.

- Another aspect to consider is specialization. In the vast field of software development, carving out a niche for yourself can be a highly effective strategy. This could mean focusing on a particular programming language that is in high demand but has a limited supply of experts. For instance, although JavaScript is widely used, specializing in a framework like React or Angular can set you apart.

- Expanding your space within your niche is also crucial. This means not only being proficient in coding but also understanding the business or industry you’re serving. A developer who understands the specific challenges and goals of a business sector can offer more tailored solutions, making themselves an indispensable asset.

- Moreover, with the growing emphasis on data security and privacy, developers with skills in cybersecurity and data protection can find lucrative opportunities. As businesses become more aware of the risks of data breaches and cyber-attacks, they are willing to invest in developers who can build secure applications.

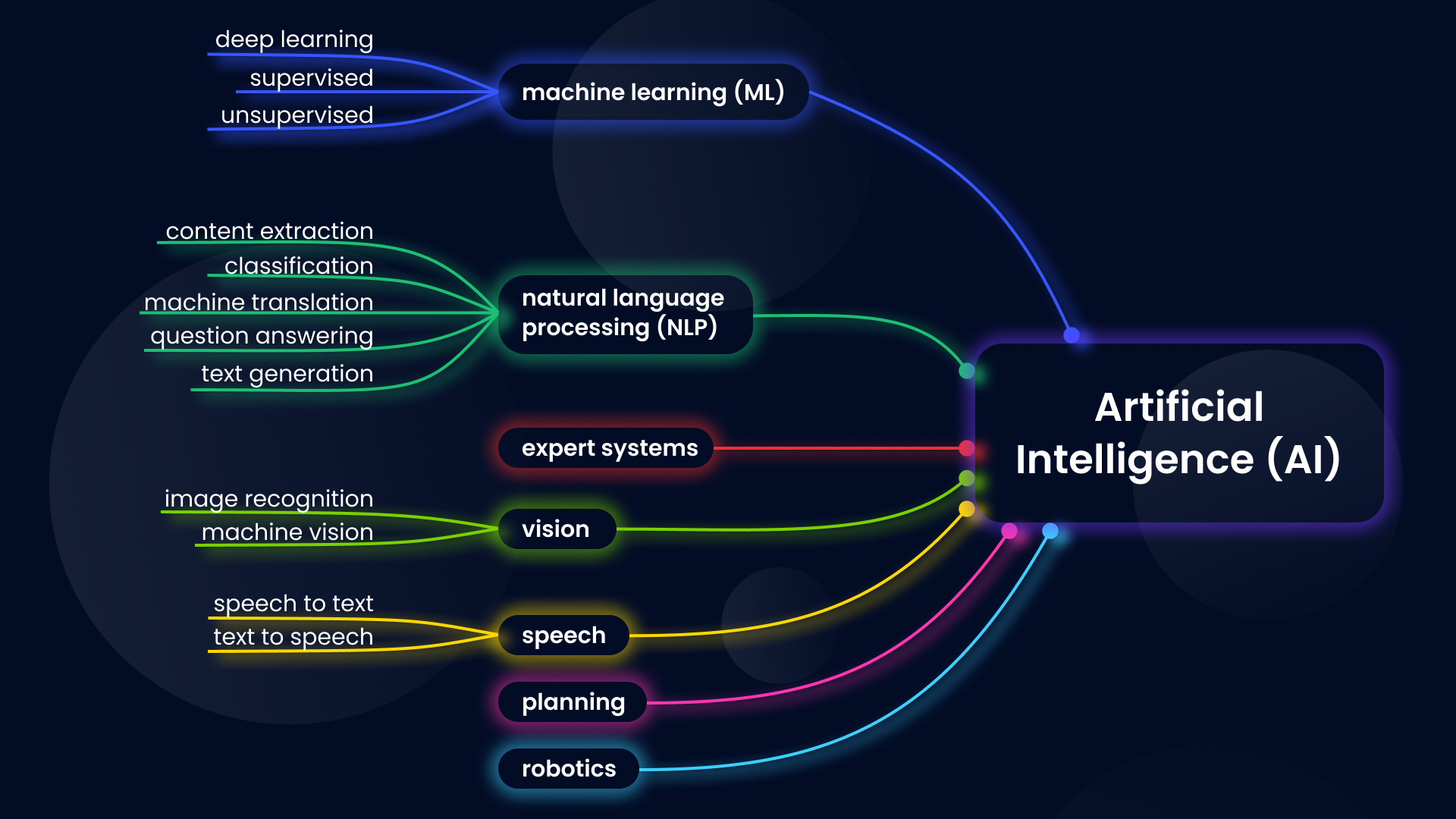

AI

AI is an umbrella term. We call a technology an AI when it doesn’t work. When it does work, it gets a new name, like deep learning or machine vision.

A bit of history

Here’s a quick history of AI development over 70 years.

- 1950s: The concept of AI is formalized by John McCarthy and others. The term “Artificial Intelligence” is introduced in 1955.

- 1956: The Dartmouth Conference marks the birth of AI as a research field.

- 1961: An IBM 704 at Bell Labs is used for early computer speech synthesis.

- 1960s: Development of LiDAR technology begins. The first industrial robot, Unimate, is employed in a General Motors assembly line.

- 1968: Kubrick’s 2001: A Space Odyssey is released, starting the era of fears of artificial intelligence supremacy.

- 1970s: LiDAR technology advances. AI research delves into rule-based systems, leading to the first expert systems.

- 1980s: The field of Natural Language Processing (NLP) begins to flourish, focusing on machine translation and text understanding. Late in the decade, a second AI winter starts due to unmet expectations.

- 1984: The Terminator franchise starts.

- 1990s: The second AI winter ends. IBM’s Deep Blue beats world chess champion Garry Kasparov. Machine learning, focusing on data-driven approaches, gains prominence.

- 2000s: Text-to-speech technology becomes more mainstream. The field of robotics advances with autonomous drones and exploration rovers.

- 2010s: AI experiences a resurgence due to advancements in deep learning. Google’s AlphaGo defeats world Go champion Lee Sedol. LiDAR becomes crucial in autonomous vehicle development. AI in healthcare starts making significant contributions, particularly in diagnostics. Siri launches as a standalone iOS app and is then built into iOS 5, debuting on the iPhone 4S in October 2011.

- 2020s: AI continues to grow, integrating into various sectors. Advancements in NLP lead to sophisticated language models like GPT-3. Quantum computing starts to intersect with AI, promising future breakthroughs.

Data analysis

What should your kids start learning if you want them to earn money in the future? Data analysis. It is an emerging opportunity because there is so much data! We’re simply outnumbered by all the existing data, and even though we have better tools than ever for analyzing it, we still need more people.

Predictive analysis is also at its peak, and some modern tools go beyond predictions to recommend actions. You feed the results of those actions back into your models, and you get a prescriptive analysis model. For example, when a company emails you a reminder that you didn’t buy the items you put into the basket, or offers you a discount, be sure that these are all automated emails. The prescriptive analytics model tries to figure out the best timing and frequency of emails and the response rate for every particular emailing scenario.
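In its simplest form, the email example boils down to comparing response rates across scenarios and recommending the best one. The Python sketch below shows only that core step; real prescriptive models weigh many more signals, and the scenario names and numbers here are invented for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ScenarioResult:
    name: str
    emails_sent: int
    responses: int

    @property
    def response_rate(self) -> float:
        """Share of sent emails that got a response (0.0 if none were sent)."""
        return self.responses / self.emails_sent if self.emails_sent else 0.0


def best_scenario(results: Iterable[ScenarioResult]) -> ScenarioResult:
    """Recommend the scenario with the highest observed response rate."""
    return max(results, key=lambda result: result.response_rate)


if __name__ == "__main__":
    # Made-up results for three hypothetical emailing scenarios.
    results = [
        ScenarioResult("reminder after 1 hour", emails_sent=1000, responses=20),
        ScenarioResult("reminder after 1 day", emails_sent=1000, responses=45),
        ScenarioResult("discount after 3 days", emails_sent=500, responses=30),
    ]
    winner = best_scenario(results)
    print(f"{winner.name}: {winner.response_rate:.1%} response rate")
```

Comparing rates rather than raw response counts matters here: the third scenario has fewer responses than the second but the higher rate, because it was sent to half as many people.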

GitHub Copilot

GitHub Copilot is like a smart assistant for writing computer code. It works with a programmer, suggesting lines of code or even whole chunks of code to help them write programs faster and more efficiently. It’s a bit like auto-complete on your phone, where it predicts what you’re going to type next, but for coding. GitHub Copilot learns from a huge amount of code that it has seen before, so it can give suggestions that make sense for what the programmer is trying to do. This tool can be really helpful, especially for speeding up the coding process and helping with coding ideas.

Is it secure? Is it reliable? It’s only as good as the code it learned from on GitHub. Copilot is a massive ML model for programmers and a great accelerator. There’s simply no reason why developers should avoid it. Will it replace developers? Not in the near future.

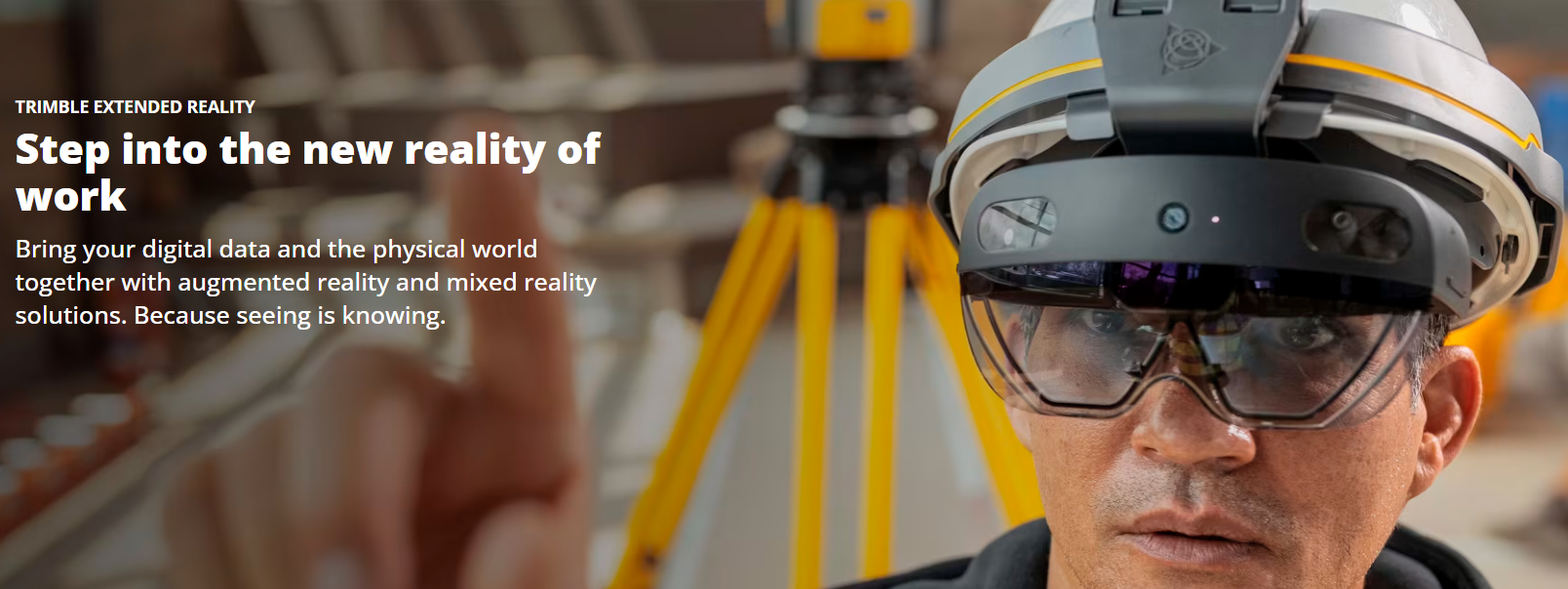

AR and VR

The photo below is a mockup. Apple invested tons of money into AR glasses development but didn’t come up with a device. They were not the only ones, as Meta has also lost billions of dollars trying to create a VR-based Metaverse. 2023 was a dark year for AR and VR, and the chances are high that it will stay that way in 2024.

What can change, though?

AR/VR glasses, lenses, etc., are quite expensive and will not become consumer devices anytime soon. However, some industries consider AR and holo lenses promising and potentially beneficial for business. For example, companies like Trimble try to take advantage of AR solutions for construction projects.

Quantum computing

Quantum computing is a type of computing that uses the principles of quantum mechanics, a fundamental theory in physics that describes the behavior of energy and matter at atomic and subatomic levels. It represents a significant shift from classical computing, which is based on binary bits (0s and 1s): quantum computers use qubits instead.

Unlike classical bits, quantum bits, or qubits, can exist in a combination of states simultaneously (thanks to superposition). For certain problems, this allows quantum computers to process information much faster than classical computers. Quantum computing is probabilistic rather than deterministic, meaning a measurement yields each outcome with some probability rather than a definite answer, which is a fundamental change from classical computing.
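A tiny classical simulation can make superposition and probabilistic measurement less abstract. The Python sketch below tracks a single qubit as two amplitudes, applies a Hadamard gate (a standard gate that turns a definite 0 into an equal superposition), and then samples measurements. It is a toy model running on an ordinary computer, not how a quantum device is programmed, and the function names are chosen for this example.

```python
import math
import random
from typing import Tuple

State = Tuple[complex, complex]  # amplitudes for outcomes 0 and 1


def hadamard(state: State) -> State:
    """Apply the Hadamard gate to a single-qubit state."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state: State) -> Tuple[float, float]:
    """Probability of measuring 0 and 1: squared magnitude of each amplitude."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


def measure(state: State, rng: random.Random) -> int:
    """Sample one measurement outcome (0 or 1) from the state."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


if __name__ == "__main__":
    zero: State = (1 + 0j, 0j)           # a qubit that is definitely 0
    superposed = hadamard(zero)          # equal mix of 0 and 1
    print(probabilities(superposed))     # both close to 0.5

    rng = random.Random(2024)
    shots = [measure(superposed, rng) for _ in range(1000)]
    print(f"measured 0: {shots.count(0)} times, 1: {shots.count(1)} times")
```

Each individual measurement gives a plain 0 or 1; only the statistics over many runs reveal the underlying probabilities, which is the sense in which quantum results are probabilistic.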

What do we have today?

IBM has been a pioneer in quantum computing, launching its IBM Q Experience in 2016 to provide public access to its quantum processors and introducing various quantum processors over the years. In November 2021, IBM unveiled its 127-qubit quantum processor called ‘Eagle’. This processor marked a significant step forward in the quantum computing field due to its ability to handle a larger number of qubits, which are the basic units of quantum computing.

Before that, in 2019, Google announced that it had achieved quantum supremacy. This means their quantum computer, Sycamore, performed a specific task in 200 seconds that would take the world’s most powerful supercomputer 10,000 years to complete.

There is also Intel, which released a 49-qubit superconducting quantum test chip, “Tangle Lake,” in 2018 and also researches silicon-based qubits. Microsoft released its Quantum Development Kit in 2017.

What’s going to happen to quantum computing in 2024?

Some believe that it was Thomas J. Watson, the Chairman of IBM, who said in 1943: “I think there is a world market for maybe five computers.” What we want to say is that many people think there is no market for quantum computing and that we don’t have problems big enough to need what qubits offer, and they might be wrong.

We can use quantum computing for so many purposes, including the growth of land capacity or saving lives during natural disasters. We will find a way to use quantum computers. Alas, at the moment, building reliable qubits is still a problem.

Summing up

The software development and technology trends above will affect the IT labor market and the way companies hire engineers in 2024.

- For sure, there is going to be a higher demand for particular specialists, namely cybersecurity engineers, ML developers, and big data analysts.

- The recruitment process itself will be affected by AI. Today, we have tools that speed up screening and help you make better, unbiased choices when selecting a candidate.

- Because of the political instability around the globe, companies might want to have employees worldwide and in the safest places on the planet.

- The economic downturn will force some companies to decrease their expenses on risky technologies and invest more in tools that help businesses save money.

- Employers will demand more from their applicants for less money.

But the future may also turn out nothing like we imagine. Just think of the Covid pandemic once again. Would you have believed, back in 2019, that we would all go on the cloud in a matter of weeks instead of years? Or think of that guy at IBM who was supposedly sure there was a market for only five computers. Could he ever have imagined that, decades later, every kid would have a more powerful computer than NASA once had? And that they would play Candy Crush on it.

Our article is not a prediction. It’s a list of things that might happen to us with higher probabilities than other things. Let’s hope for the best and do what we can to make this world a better, more innovative place.
